
Using a Custom TensorFlow Model with Blocks

The Blocks Development Tool includes a sample op mode that demonstrates how a user can load a custom inference model. In this example, we will load a TensorFlow inference model from a prior FIRST Tech Challenge season.

The Robot Controller allows you to load an inference model that is in the form of a TensorFlow Lite (.tflite) file. If you are a very advanced user, you can use Google's TensorFlow Object Detection API to create your own custom inference model. In this example, however, we will use a .tflite file from a prior season (Skystone).
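
Before uploading, it can help to confirm that a file really is a TensorFlow Lite model. The sketch below is an informal local check, not something the Robot Controller itself performs. It relies on one fact about the format: a .tflite file is a FlatBuffer whose schema declares the file identifier "TFL3", stored in bytes 4 through 7. The function name `looks_like_tflite` is hypothetical.

```python
from pathlib import Path

# TensorFlow Lite models are FlatBuffers; the TFLite schema declares the
# file identifier "TFL3", which sits right after a 4-byte root offset.
TFLITE_IDENTIFIER = b"TFL3"


def looks_like_tflite(path: str) -> bool:
    """Heuristic check: .tflite extension plus the TFL3 FlatBuffer identifier."""
    p = Path(path)
    if p.suffix.lower() != ".tflite" or not p.is_file():
        return False
    with p.open("rb") as f:
        header = f.read(8)
    # Bytes 0-3 are the FlatBuffer root offset; bytes 4-7 are the identifier.
    return len(header) == 8 and header[4:8] == TFLITE_IDENTIFIER
```

A common way to end up with an unusable file is saving the GitHub web page instead of the file itself; such a file starts with HTML text and fails this check.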

The inference model is available on GitHub at the following link:

Press the "Download" button to download the file from GitHub to your local device.
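
If you prefer to fetch the file from a script rather than the browser, a minimal sketch using Python's standard library follows. The model's link is missing from this copy of the page, so any URL you pass in is your own; the helper name `download_model` is hypothetical.

```python
import urllib.request
from pathlib import Path
from urllib.parse import urlsplit


def download_model(url: str, dest_dir: str = ".") -> Path:
    """Save the file at `url` into `dest_dir`, keeping its original file name."""
    # Take the file name from the URL path, ignoring any query string
    # such as "?raw=true".
    name = Path(urlsplit(url).path).name
    if not name:
        raise ValueError(f"cannot derive a file name from {url!r}")
    dest = Path(dest_dir) / name
    dest.parent.mkdir(parents=True, exist_ok=True)
    # urlretrieve copies the response body into the destination file.
    urllib.request.urlretrieve(url, str(dest))
    return dest
```

For example, `download_model("https://example.com/path/to/model.tflite")` (a placeholder URL) would save `model.tflite` in the current directory. Make sure the URL points at the raw file, not the GitHub page that displays it.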

Once the file is on your laptop, you need to upload it to the Robot Controller. Connect your laptop to the Robot Controller's wireless network and navigate to the system's "Manage" page.

Scroll toward the bottom of the page and click the "Select TensorflowLite Model File" button to open a dialog box for selecting your .tflite file.

Press the "Select TensorflowLite Model File" button.

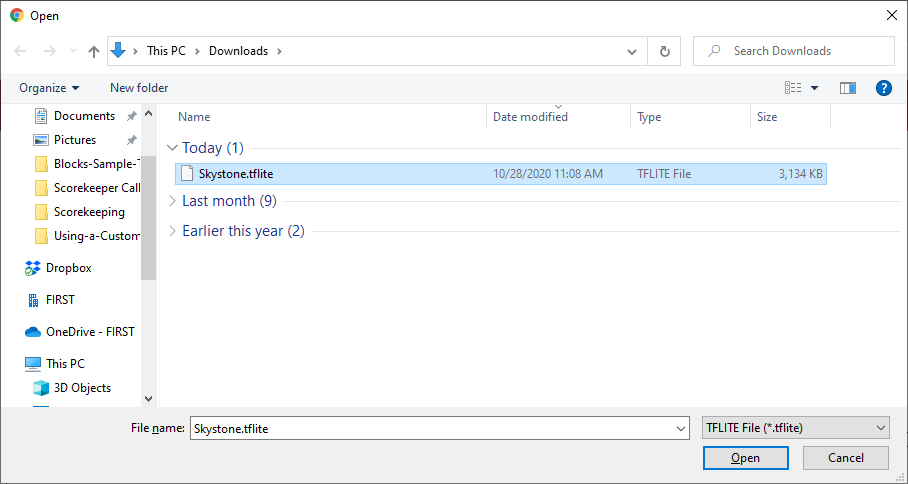

Select and then upload your .tflite file using the dialog box.

TensorFlow 2023-2024