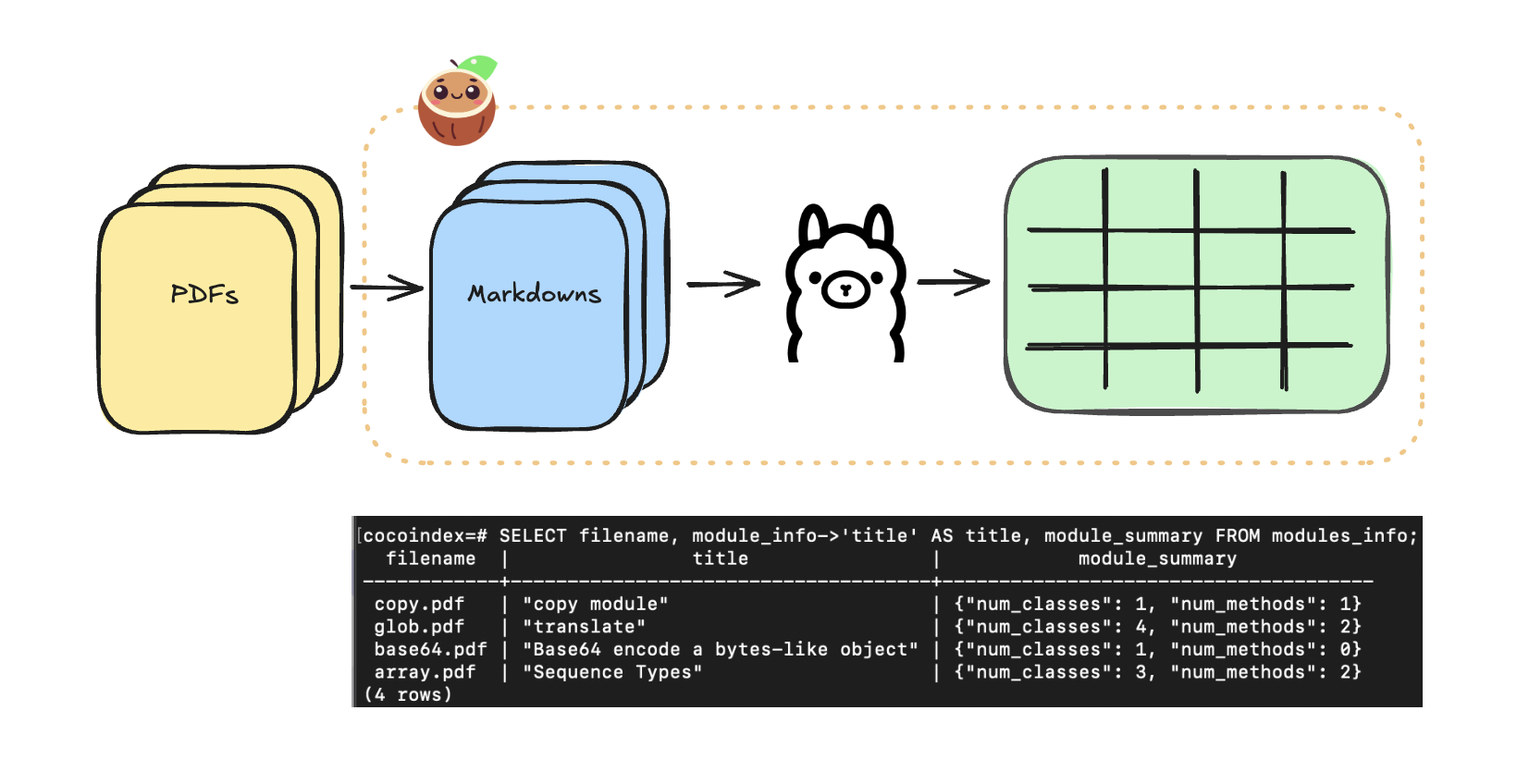

In this example, we:

- Convert PDFs (generated from a few Python docs) into Markdown.

- Extract structured information from the Markdown using an LLM.

- Use a custom function to further extract information from the structured output.
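The last two steps can be pictured in plain Python. This is a minimal sketch under stated assumptions, not the example's actual code. It skips the PDF conversion and the LLM call and only shows the shape of a structured output plus a custom function applied to it. The field names (`description`, `classes`, `methods`), the dataclasses, and the helpers `parse_module_info` and `summarize_module` are hypothetical illustrations. Only `title` is grounded, because the SQL query later in this README reads `module_info->'title'`.

```python
from dataclasses import dataclass, field
from typing import Any


# Hypothetical schema for the LLM's structured output (field names assumed).
@dataclass
class MethodInfo:
    name: str
    description: str = ""


@dataclass
class ClassInfo:
    name: str
    description: str = ""
    methods: list[MethodInfo] = field(default_factory=list)


@dataclass
class ModuleInfo:
    title: str
    description: str = ""
    classes: list[ClassInfo] = field(default_factory=list)
    methods: list[MethodInfo] = field(default_factory=list)


# Hypothetical result of the custom function.
@dataclass
class ModuleSummary:
    num_classes: int
    num_methods: int


def parse_module_info(data: dict[str, Any]) -> ModuleInfo:
    """Build a ModuleInfo from a JSON-like dict, e.g. an LLM's structured output."""
    def parse_method(m: dict[str, Any]) -> MethodInfo:
        return MethodInfo(name=m["name"], description=m.get("description", ""))

    return ModuleInfo(
        title=data["title"],
        description=data.get("description", ""),
        classes=[
            ClassInfo(
                name=c["name"],
                description=c.get("description", ""),
                methods=[parse_method(m) for m in c.get("methods", [])],
            )
            for c in data.get("classes", [])
        ],
        methods=[parse_method(m) for m in data.get("methods", [])],
    )


def summarize_module(info: ModuleInfo) -> ModuleSummary:
    """Custom step: derive simple counts from the structured output.

    Counts top-level classes and module-level methods only (a design choice
    for this sketch; methods nested inside classes are not included).
    """
    return ModuleSummary(
        num_classes=len(info.classes),
        num_methods=len(info.methods),
    )


# Usage with a hand-written stand-in for the LLM's output (not real data):
sample = {
    "title": "array",
    "classes": [{"name": "array", "methods": [{"name": "append"}]}],
    "methods": [],
}
summary = summarize_module(parse_module_info(sample))
print(summary)  # ModuleSummary(num_classes=1, num_methods=0)
```

Keeping the summary as a separate pure function over the typed output means it can be re-run or changed without calling the LLM again.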

If you like our work, please give CocoIndex a star on GitHub to support us. Thank you so much, with a warm coconut hug 🥥🤗.

Before running the example, you need to:

- Install Postgres if you don't already have it.

- Install and configure an LLM API. This example uses Ollama, which runs LLM models locally; follow this guide to get it ready. Alternatively, you can follow the comments in the source code to switch to OpenAI, and configure your OpenAI API key before running the example.

Install dependencies:

pip install -e .

Setup:

python main.py cocoindex setup

Update index:

python main.py cocoindex update

After the index is built, you have a table named modules_info. You can query it at any time, e.g. start a Postgres shell:

psql postgres://cocoindex:cocoindex@localhost/cocoindex

And run the SQL query:

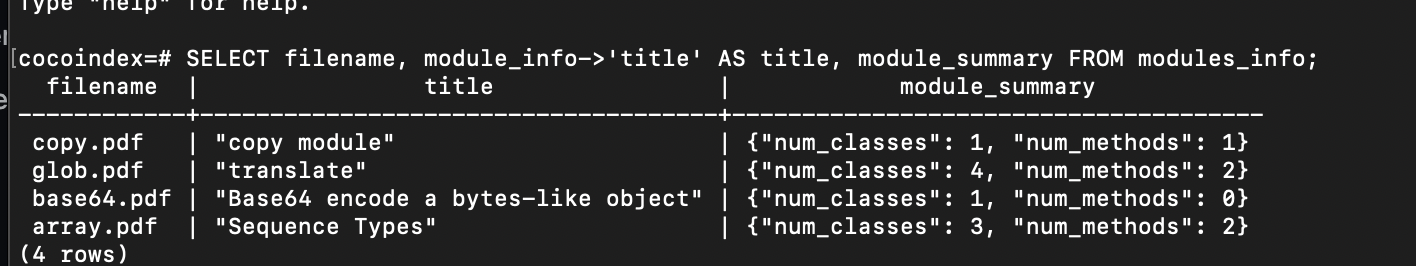

SELECT filename, module_info->'title' AS title, module_summary FROM modules_info;

You should see results like:

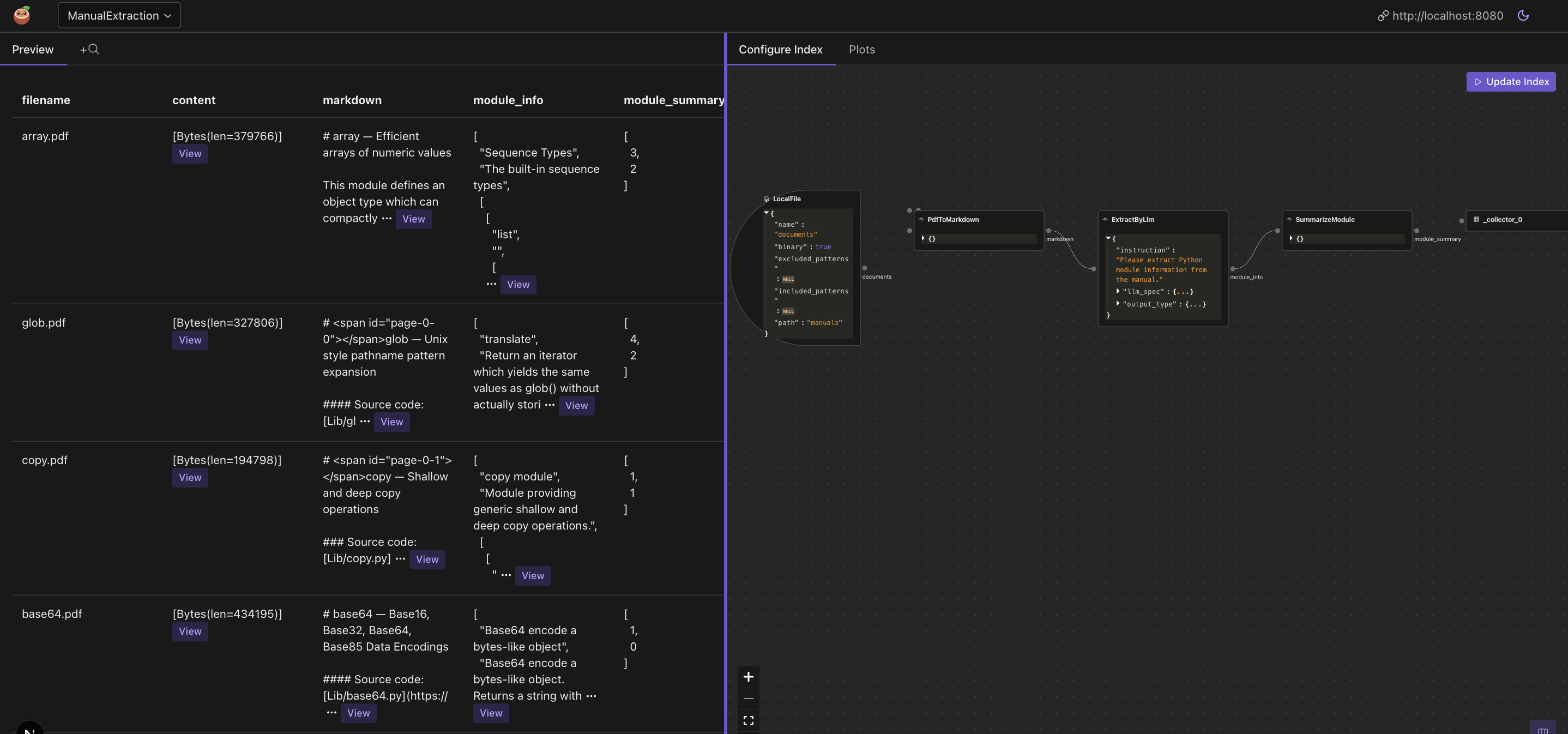

CocoInsight is a tool to help you understand your data pipeline and data index. CocoInsight is now in Early Access (free) 😊 You found us! Here is a quick 3-minute video tutorial about CocoInsight: Watch on YouTube.

Run CocoInsight to understand your RAG data pipeline:

python main.py cocoindex server -c https://cocoindex.io

Then open the CocoInsight UI at https://cocoindex.io/cocoinsight. It connects to your local CocoIndex server with zero data retention.

You can view the pipeline flow and the data preview in the CocoInsight UI: