
docs/docs/about/community.md (+1 -1)

@@ -7,7 +7,7 @@ description: Join the CocoIndex community
 
 Welcome with a huge coconut hug 🥥⋆。˚🤗.
 
-We are super excited for community contributions of all kinds - whether it's code improvements, documentation updates, issue reports, feature requests on [GitHub](https://github.com/cocoIndex/cocoindex), and discussions in our [Discord](https://discord.com/invite/zpA9S2DR7s).
+We are super excited for community contributions of all kinds - whether it's code improvements, documentation updates, issue reports, feature requests on [GitHub](https://github.com/cocoindex-io/cocoindex), and discussions in our [Discord](https://discord.com/invite/zpA9S2DR7s).
 
 We would love to fostering an inclusive, welcoming, and supportive environment. Contributing to CocoIndex should feel collaborative, friendly and enjoyable for everyone. Together, we can build better AI applications through robust data infrastructure.

docs/docs/about/contributing.md (+1 -1)

@@ -36,7 +36,7 @@ We love contributions from our community! This guide explains how to get involve
 
 To submit your code:
 
-1. Fork the [CocoIndex repository](https://github.com/cocoIndex/cocoindex)
+1. Fork the [CocoIndex repository](https://github.com/cocoindex-io/cocoindex)
 2. [Create a new branch](https://docs.github.com/en/desktop/making-changes-in-a-branch/managing-branches-in-github-desktop) on your fork
 3. Make your changes
 4. [Open a Pull Request (PR)](https://docs.github.com/en/pull-requests/collaborating-with-pull-requests/proposing-changes-to-your-work-with-pull-requests/creating-a-pull-request-from-a-fork) when your work is ready for review

docs/docs/core/cli.mdx (+1)

@@ -65,6 +65,7 @@ The following subcommands are available:
 | `setup` | Check and apply setup changes for flows, including the internal and target storage (to export). |
 | `show` | Show the spec for a specific flow. |
 | `update` | Update the index defined by the flow. |
+| `evaluate` | Evaluate the flow and dump flow outputs to files. Instead of updating the index, it dumps what should be indexed to files. Mainly used for evaluation purpose. |
 
 Use `--help` to see the full list of subcommands, and `subcommand --help` to see the usage of a specific one.

docs/docs/getting_started/quickstart.md (+1 -1)

@@ -217,6 +217,6 @@ It will ask you to enter a query and it will return the top 10 results.
 Next, you may want to:
 
 * Learn about [CocoIndex Basics](../core/basics.md).
-* Learn about other examples in the [examples](https://github.com/cocoIndex/cocoindex/tree/main/examples) directory.
+* Learn about other examples in the [examples](https://github.com/cocoindex-io/cocoindex/tree/main/examples) directory.
 * The `text_embedding` example is this quickstart with some polishing (loading environment variables from `.env` file, extract pieces shared by the indexing flow and query handler into a function).
 * Pick other examples to learn upon your interest.

docs/docs/ops/functions.md (+11)

@@ -49,6 +49,17 @@ Return type: `vector[float32; N]`, where `N` is determined by the model
 * `output_type` (type: `type`, required): The type of the output. e.g. a dataclass type name. See [Data Types](/docs/core/data_types) for all supported data types. The LLM will output values that match the schema of the type.
 * `instruction` (type: `str`, optional): Additional instruction for the LLM.
 
+:::tip Clear type definitions
+
+Definitions of the `output_type` is fed into LLM as guidance to generate the output.
+To improve the quality of the extracted information, giving clear definitions for your dataclasses is especially important, e.g.
+
+* Provide readable field names for your dataclasses.
+* Provide reasonable docstrings for your dataclasses.
+* For any optional fields, clearly annotate that they are optional, by `SomeType | None` or `typing.Optional[SomeType]`.
+
+:::
+
 Input data:
 
 * `text` (type: `str`, required): The text to extract information from.
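
As a rough illustration of the tip added to `docs/docs/ops/functions.md` above, the sketch below shows what "clear type definitions" could look like for a dataclass intended for `output_type`. The class and field names (`PaperAuthor`, `PaperSummary`, and so on) are hypothetical and not taken from the CocoIndex docs; the sketch uses only the Python standard library and does not call any CocoIndex API. Whether each field type is supported should be checked against the Data Types page.

```python
import dataclasses
import typing


@dataclasses.dataclass
class PaperAuthor:
    """One author listed on a research paper."""

    full_name: str
    # Optional: not every paper states an affiliation.
    affiliation: typing.Optional[str] = None


@dataclasses.dataclass
class PaperSummary:
    """Key facts extracted from the text of a research paper."""

    title: str
    authors: list[PaperAuthor]
    # Optional: stays None when the text does not state a year.
    publication_year: typing.Optional[int] = None


# Inspect the definition the way a schema-driven consumer might:
# readable field names plus the class docstring.
field_names = [f.name for f in dataclasses.fields(PaperSummary)]
print(field_names)
print(PaperSummary.__doc__)
```

On Python 3.10 and later, `typing.Optional[int]` can equivalently be written as `int | None`, which is the other spelling the tip mentions.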

Copy file name to clipboardExpand all lines: examples/code_embedding/README.md

+11-1

Original file line number

Diff line number

Diff line change

@@ -1,4 +1,14 @@

1

-

Simple example for cocoindex: build embedding index based on local files.

1

+

# Build embedding index for codebase

2

+

3

+

4

+

5

+

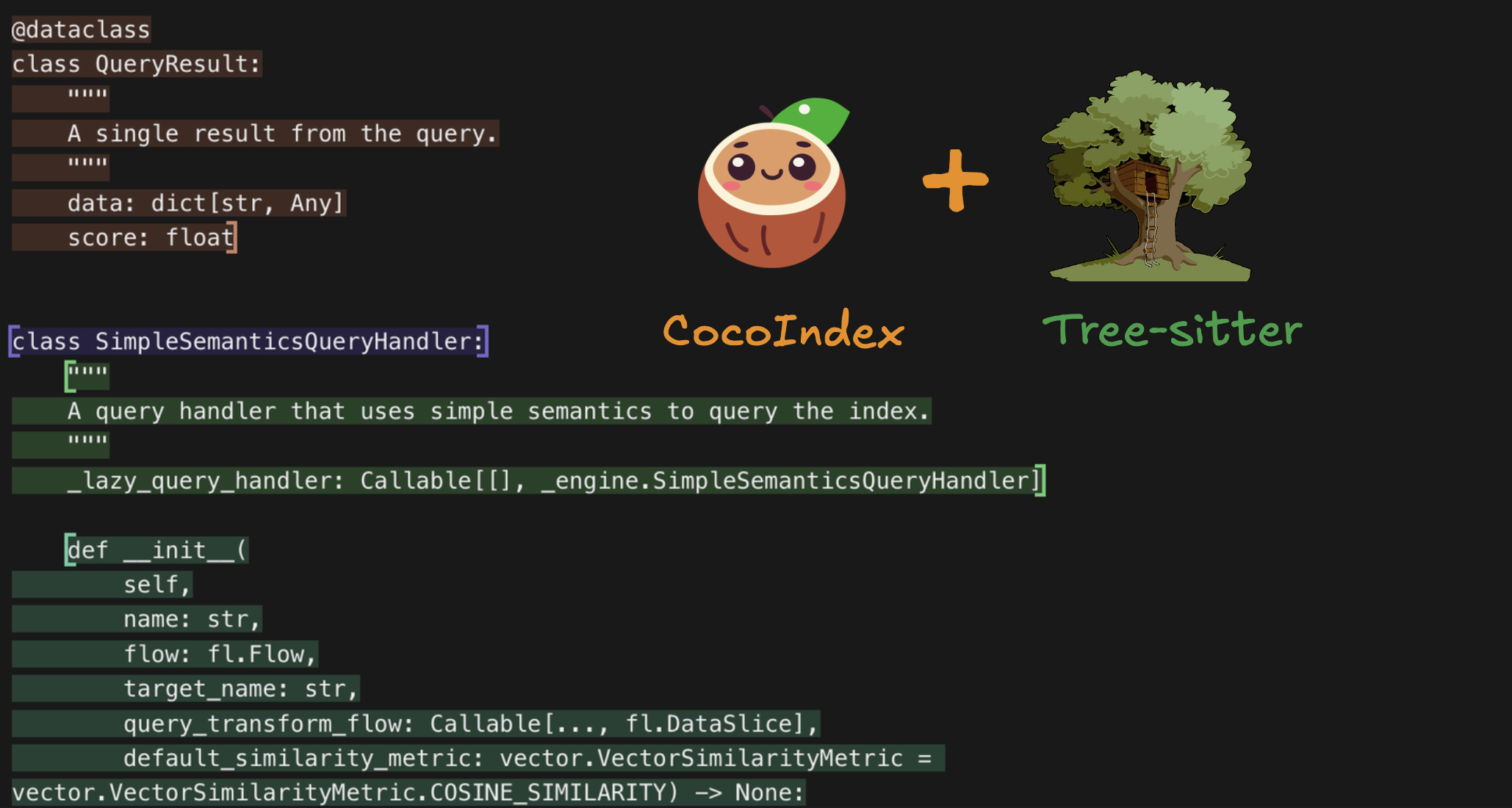

In this example, we will build an embedding index for a codebase using CocoIndex. CocoIndex provides built-in support for code base chunking, with native Tree-sitter support. [Tree-sitter](https://en.wikipedia.org/wiki/Tree-sitter_%28parser_generator%29) is a parser generator tool and an incremental parsing library, it is available in Rust 🦀 - [GitHub](https://github.com/tree-sitter/tree-sitter). CocoIndex has built-in Rust integration with Tree-sitter to efficiently parse code and extract syntax trees for various programming languages.

6

+

7

+

8

+

Please give [Cocoindex on Github](https://github.com/cocoindex-io/cocoindex) a star to support us if you like our work. Thank you so much with a warm coconut hug 🥥🤗. [](https://github.com/cocoindex-io/cocoindex)

9

+

10

+

You can find a detailed blog post with step by step tutorial and explanations [here](https://cocoindex.io/blogs/index-code-base-for-rag).

11

+

2

12

3

13

## Prerequisite

4

14

[Install Postgres](https://cocoindex.io/docs/getting_started/installation#-install-postgres) if you don't have one.
