Convolutional net gives different results under ML.NET and Keras/TensorFlow #2022

Comments

|

I did some more investigation on this. First, I focused on the [...] Next, I tried reconstructing the bitmap after the [...]

    var stage3 = stage2.Append(new ImagePixelExtractingEstimator(env,
        new ImagePixelExtractorTransform.ColumnInfo(
            "ImageResized", Areas.InputLayerName,
            ImagePixelExtractorTransform.ColorBits.Rgb, interleave: true,
            scale: 1 / 255f)));

    // Take the extracted pixel vector of the first row in the preview.
    var vector = (VBuffer<float>) stage3.Preview(data).RowView.First().Values.Last().Value;
    var reconstruction = new Bitmap(400, 400);
    var floats = vector.DenseValues().ToList();
    var arrayPointer = 0;

    // The outer loop runs over columns because the extractor emits pixels column-first.
    for (var col = 0; col < 400; ++col)
    {
        for (var row = 0; row < 400; ++row)
        {
            // Interleaved R, G, B values, scaled back from [0, 1] to [0, 255].
            reconstruction.SetPixel(col, row,
                Color.FromArgb((int)(floats[arrayPointer++] * 255), (int)(floats[arrayPointer++] * 255),
                    (int)(floats[arrayPointer++] * 255)));
        }
    }

    reconstruction.Save("reconstructed.jpg", ImageFormat.Jpeg);

This gave me back pretty much the same image as the original input, no problems there. I even ran the Keras model on this reconstructed image and it gave me correct answers, while the ML.NET run produced the wrong output again.

Note that the reconstruction fills the bitmap by columns. This is because, as I noticed while reading the source for ImagePixelExtractorTransform, the extractor fills the vector COLUMN FIRST. (I'm using [...]

This was interesting, so I went and checked what the Keras' [...]

Keras code and result:

    img_array = img_to_array(image)

    img_array = img_array
    print(img_array.reshape((480000,)))
    # produces
    # [195. 198. 155. 215. 218. 175. 201. 204. 159. 191. ... 48. 91. 100. 97. 98. 107. 104. 93. 102. 99.]

This corresponds directly to pixel color values [...]

Compare that to what ML.NET does:

    var vector = (VBuffer<float>) stage3.Preview(data).RowView.First().Values.Last().Value;

    var floats = vector.DenseValues().ToList();
    // produces
    // 195 198 155 191 193 154 179 180 149 208

This corresponds to pixel values [...]

This leads me to conclude that ML.NET is feeding the image into TensorFlow rotated by 90 degrees, leading to wrong results (obviously).

To confirm the hypothesis, I went back to the image, flipped it horizontally and rotated it 90 degrees counter-clockwise - this means what was originally a row was now a column. Feeding this flip-rotated image into the ML.NET code, without any other changes, gave me back CORRECT results: [...]

There are some small differences but I attribute those to GPU vs. CPU precision differences. Good enough.

So the bottom line here is the problem is either on my side (training on row-based data when it should have been column-based data) or in the [...]

At any rate, I now have, if not a solution, a workaround at least. Additionally, the issue still remains that |
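The ordering mismatch described in this comment can be illustrated without ML.NET or Keras. The following is a minimal pure-Python sketch on a tiny 2x3 image; all helper names (`flatten_row_major`, `flatten_column_major`, `transpose`, `flip_horizontal`, `rotate_90_ccw`) are made up for illustration and belong to neither library. It shows that reading pixels column-first is the same as reading the transposed image row-first, and that a horizontal flip followed by a 90-degree counter-clockwise rotation is exactly that transpose, which would explain why the flip-rotated image worked as a workaround.

```python
# Tiny "image": 2 rows x 3 columns; each pixel is an (R, G, B) tuple.
image = [
    [(1, 1, 1), (2, 2, 2), (3, 3, 3)],
    [(4, 4, 4), (5, 5, 5), (6, 6, 6)],
]


def flatten_row_major(img):
    """Interleaved RGB values, walking each row left to right (the order Keras produced in the issue)."""
    return [channel for row in img for pixel in row for channel in pixel]


def flatten_column_major(img):
    """Interleaved RGB values, walking each column top to bottom (the order observed from ML.NET 0.8)."""
    height, width = len(img), len(img[0])
    return [channel for c in range(width) for r in range(height) for channel in img[r][c]]


def transpose(img):
    """Swap rows and columns."""
    return [list(column) for column in zip(*img)]


def flip_horizontal(img):
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in img]


def rotate_90_ccw(img):
    """Rotate counter-clockwise: the right-most column becomes the top row."""
    height, width = len(img), len(img[0])
    return [[img[j][width - 1 - i] for j in range(height)] for i in range(width)]


# Column-first reading of the image equals row-first reading of its transpose.
assert flatten_column_major(image) == flatten_row_major(transpose(image))

# Flip horizontally, then rotate 90 degrees counter-clockwise: that is a transpose.
workaround_image = rotate_90_ccw(flip_horizontal(image))
assert workaround_image == transpose(image)

# So a column-first extractor fed the flip-rotated image emits the row-first
# order of the original image, which is what the model was trained on.
assert flatten_column_major(workaround_image) == flatten_row_major(image)
```

Under this reading, the data reaching TensorFlow is transposed rather than purely rotated; a plain 90-degree rotation alone would not reproduce the training order.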

|

Hi @mareklinka, thanks for opening this issue and for the detailed explanation of your investigation. |

|

Thanks, @yaeldekel. Speaking of the resize transform, would it be possible to add a third resize mode to it that would not preserve the aspect ratio and would instead squeeze/expand the image into the new dimensions? Something like [...] It could be achieved by using something like this SO answer. This even looks like something I could take a look at implementing, if deemed acceptable. |

|

Hi @mareklinka, would it be possible for you to share one of the 400x400 images that gave you an exception? I have tried reproducing this exception with a few images that I have on my machine, but haven't been successful. |

|

No problem, @yaeldekel. I reproduced this as a unit test project - just hit [...] One test resizes from a full-size image to 400x400. This passes. Full stack: |

|

Hi @mareklinka, I still could not reproduce this error using the latest ML.NET. I think it might have something to do with this commit: 284e02c#diff-b0cd912107c73a762b5a5b0e99942387L486 |

|

You are right, @yaeldekel, the exception does not occur with the 0.9 NuGet packages. Thanks for the investigation! |

|

If I understand things correctly, #2130 should resolve the issue with |

|

Ah, there is one more point to this issue - the resize mode. Having the |

|

@mareklinka Any chance you'd be willing to add a Fill mode to the transform? It shouldn't be super hard; the main code that does the resizing is here:

Basically, all you need to do is set destX and destY to the desired values for the new enum type. Most of us are quite busy with the API and documentation before the 1.0 release, so the chance of any feature request being implemented is quite small. |

|

@Ivanidzo4ka Of course, I can take a crack at it. As you said, it shouldn't be too hard. |

System information

.NET Core SDK (reflecting any global.json):

Version: 2.2.100

Commit: b9f2fa0ca8

Runtime Environment:

OS Name: Windows

OS Version: 10.0.17763

OS Platform: Windows

RID: win10-x64

Base Path: C:\Program Files\dotnet\sdk\2.2.100\

Host (useful for support):

Version: 2.2.0

Commit: 1249f08fed

.NET Core SDKs installed:

2.1.202 [C:\Program Files\dotnet\sdk]

2.1.500 [C:\Program Files\dotnet\sdk]

2.1.502 [C:\Program Files\dotnet\sdk]

2.2.100 [C:\Program Files\dotnet\sdk]

.NET Core runtimes installed:

Microsoft.AspNetCore.All 2.1.6 [C:\Program Files\dotnet\shared\Microsoft.AspNetCore.All]

Microsoft.AspNetCore.All 2.2.0 [C:\Program Files\dotnet\shared\Microsoft.AspNetCore.All]

Microsoft.AspNetCore.App 2.1.6 [C:\Program Files\dotnet\shared\Microsoft.AspNetCore.App]

Microsoft.AspNetCore.App 2.2.0 [C:\Program Files\dotnet\shared\Microsoft.AspNetCore.App]

Microsoft.NETCore.App 2.0.9 [C:\Program Files\dotnet\shared\Microsoft.NETCore.App]

Microsoft.NETCore.App 2.1.6 [C:\Program Files\dotnet\shared\Microsoft.NETCore.App]

Microsoft.NETCore.App 2.2.0 [C:\Program Files\dotnet\shared\Microsoft.NETCore.App]

Issue

I'm facing an issue while trying to score my Keras-trained, TensorFlow-backed model using ML.NET 0.8.

The ML task

This is a rather small convolutional neural net that inputs an image and attempts to identify two areas of interest in the image. We are attempting to read some data off a hardware device.

The model

We have a trained Keras model (.h5) and we converted it into a frozen TensorFlow model (.pb). The net contains 4 convolutional layers, 1 dense layer (the output), and uses ReLU activations. The output of the model is an array of 8 numbers: [X1_1, Y1_1, X2_1, Y2_1, X1_2, Y1_2, X2_2, Y2_2]. These are the top-left and bottom-right corners of the two areas of interest.
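To make the output layout concrete, here is a small Python sketch that splits the eight numbers into two boxes. It assumes the values are ordered as top-left corner then bottom-right corner for the first area, followed by the same for the second; `Box` and `decode_boxes` are hypothetical helpers, not part of the repro code. The sample vector is the first Keras prediction quoted later in this issue.

```python
from typing import List, NamedTuple, Sequence


class Box(NamedTuple):
    """Axis-aligned rectangle given by its top-left and bottom-right corners."""
    left: float
    top: float
    right: float
    bottom: float


def decode_boxes(output: Sequence[float]) -> List[Box]:
    """Split the 8-value model output into the two areas of interest."""
    if len(output) != 8:
        raise ValueError(f"expected 8 values, got {len(output)}")
    # Assumed layout: first four values describe area 1, last four describe area 2.
    return [Box(*output[0:4]), Box(*output[4:8])]


first, second = decode_boxes([96, 182, 166, 247, 218, 179, 291, 247])
print(first)
print(second)
```

Drawing these rectangles on the input image is a quick visual check of whether a prediction is plausible.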

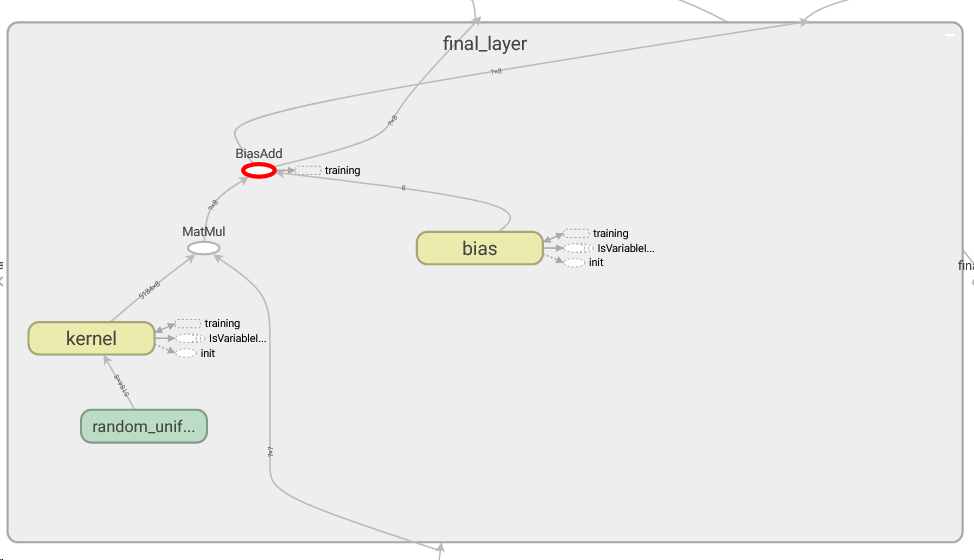

Netron diagram:

This model works very well for our use case when we execute it using Keras.

The ML.NET scoring setup

Since our main application is written in C#, I tried to use ML.NET to access the predictions from C# code. The pipeline is set up like this:

On the surface, we do the same thing as with Keras:

Then we make a predictor function from this pipeline and use that to score examples.

The issue

There are actually two issues with this. One is a problem with interoperability - the ImageResizingEstimator is doing something very different than Keras' load_img:

- load_img(x, target_size=(400,400), color_mode="rgb") loads the image in RGB and resizes it to 400x400. The image ends up "squeezed" into a square.
- ImageResizingEstimator to 400x400 either pads or crops the source image to be 400x400. The result of this operation is therefore very different.
- Feeding it an image that is already 400x400 ends in an ArgumentException - the resulting bitmap is invalid when it gets to the ImagePixelExtractingEstimator stage.

This is mainly an issue with documentation, I guess - it would be very helpful to declare this behavior explicitly, especially for interoperability scenarios such as this. At any rate, while realizing this, we went and resized our training images to 401x401 (to bypass the third bullet point issue) and retrained the model.
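The difference between "squeezing" and padding or cropping can be sketched with a little arithmetic. The Python below is only an illustration of the general idea, not the actual ML.NET or Keras implementation; the function `placement` and the mode names `"stretch"`, `"pad"` and `"crop"` are invented for this example. For each mode it computes where the scaled source image would land inside the destination bitmap.

```python
from typing import Tuple

# (x, y, width, height) of the scaled source image, in destination pixels.
Rect = Tuple[float, float, float, float]


def placement(src_w: int, src_h: int, dst_w: int, dst_h: int, mode: str) -> Rect:
    """Return the rectangle the source image occupies after resizing."""
    if mode == "stretch":
        # Ignore the aspect ratio: the image is squeezed to fill the target exactly.
        return (0.0, 0.0, float(dst_w), float(dst_h))

    scale_x = dst_w / src_w
    scale_y = dst_h / src_h
    if mode == "pad":
        # Keep the aspect ratio and fit inside the target; the rest is padding.
        scale = min(scale_x, scale_y)
    elif mode == "crop":
        # Keep the aspect ratio and cover the target; the overflow is cut off.
        scale = max(scale_x, scale_y)
    else:
        raise ValueError(f"unknown mode: {mode}")

    out_w, out_h = src_w * scale, src_h * scale
    # Center the scaled image; negative offsets mean part of it is cropped away.
    return ((dst_w - out_w) / 2, (dst_h - out_h) / 2, out_w, out_h)


for mode in ("stretch", "pad", "crop"):
    print(mode, placement(800, 400, 400, 400, mode))
```

For an 800x400 source the three modes give clearly different pixel layouts, so a model trained on stretched images sees different input after padding or cropping. For a square source such as 401x401 all three modes cover essentially the same rectangle, which is the reasoning behind the 401x401 workaround.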

Our theory was that this would both allow the resizer to do its job without an exception and avoid the difference in resize behavior, since resizing from 401x401 to 400x400 does not change the bitmap too much. So the expectation was that feeding the same 401x401 image to Keras and ML.NET should produce the same predictions.

This did not happen. The ML.NET code seems to be working and produces predictions; however, these predictions are way off from the Keras results (the Keras/TF results are actually correct, which we verified by drawing the resulting coordinates on the images).

So the question here is: is our TF setup wrong? Or is this some underlying difference in behavior which we can't see? I'm attaching repro code as well. The ML.NET part is a .NET Core 2.2 project. To run the Keras part, you'll need the whole Keras stack installed (Keras + TensorFlow). The models and some sample images are included as well.

Sample output demonstrating the issue:

ML.NET predictions for three images:

78,151,124,196,157,152,208,195

83,160,131,208,167,162,220,207

82,161,136,212,174,163,229,211

Keras output for the same three images:

[96, 182, 166, 247, 218, 179, 291, 247]

[97, 186, 169, 253, 222, 182, 297, 252]

[99, 252, 172, 314, 225, 249, 294, 311]

Some additional details:

We tested this against both TensorFlow and TensorFlow-GPU, and the issue persists. Therefore it's not a problem of running on GPU vs CPU. One possible explanation would be that I simply froze the TF graph wrong by specifying an incorrect final node, but I'm pretty sure TensorBoard is telling me that final_layer/BiasAdd is my final node:

Any help with tracing this down would be greatly appreciated - operationalizing a TF model directly from C# is an awesome capability to have.

Source

MLNetConvNetRepro.zip
