diff --git a/docs/source/en/_toctree.yml b/docs/source/en/_toctree.yml

index 752219b4abd1..ba038486f21b 100644

--- a/docs/source/en/_toctree.yml

+++ b/docs/source/en/_toctree.yml

@@ -89,6 +89,8 @@

title: Kandinsky

- local: using-diffusers/ip_adapter

title: IP-Adapter

+ - local: using-diffusers/omnigen

+ title: OmniGen

- local: using-diffusers/pag

title: PAG

- local: using-diffusers/controlnet

@@ -292,6 +294,8 @@

title: LTXVideoTransformer3DModel

- local: api/models/mochi_transformer3d

title: MochiTransformer3DModel

+ - local: api/models/omnigen_transformer

+ title: OmniGenTransformer2DModel

- local: api/models/pixart_transformer2d

title: PixArtTransformer2DModel

- local: api/models/prior_transformer

@@ -448,6 +452,8 @@

title: MultiDiffusion

- local: api/pipelines/musicldm

title: MusicLDM

+ - local: api/pipelines/omnigen

+ title: OmniGen

- local: api/pipelines/pag

title: PAG

- local: api/pipelines/paint_by_example

diff --git a/docs/source/en/api/models/omnigen_transformer.md b/docs/source/en/api/models/omnigen_transformer.md

new file mode 100644

index 000000000000..ee700a04bdae

--- /dev/null

+++ b/docs/source/en/api/models/omnigen_transformer.md

@@ -0,0 +1,19 @@

+

+

+# OmniGenTransformer2DModel

+

+A Transformer model that accepts multimodal instructions to generate images for [OmniGen](https://github.com/VectorSpaceLab/OmniGen/).

+

+## OmniGenTransformer2DModel

+

+[[autodoc]] OmniGenTransformer2DModel

diff --git a/docs/source/en/api/pipelines/omnigen.md b/docs/source/en/api/pipelines/omnigen.md

new file mode 100644

index 000000000000..0b826f182edd

--- /dev/null

+++ b/docs/source/en/api/pipelines/omnigen.md

@@ -0,0 +1,106 @@

+

+

+# OmniGen

+

+[OmniGen: Unified Image Generation](https://arxiv.org/pdf/2409.11340) from BAAI, by Shitao Xiao, Yueze Wang, Junjie Zhou, Huaying Yuan, Xingrun Xing, Ruiran Yan, Chaofan Li, Shuting Wang, Tiejun Huang, Zheng Liu.

+

+The abstract from the paper is:

+

+*The emergence of Large Language Models (LLMs) has unified language

+generation tasks and revolutionized human-machine interaction.

+However, in the realm of image generation, a unified model capable of handling various tasks

+within a single framework remains largely unexplored. In

+this work, we introduce OmniGen, a new diffusion model

+for unified image generation. OmniGen is characterized

+by the following features: 1) Unification: OmniGen not

+only demonstrates text-to-image generation capabilities but

+also inherently supports various downstream tasks, such

+as image editing, subject-driven generation, and visual conditional generation. 2) Simplicity: The architecture of

+OmniGen is highly simplified, eliminating the need for additional plugins. Moreover, compared to existing diffusion

+models, it is more user-friendly and can complete complex

+tasks end-to-end through instructions without the need for

+extra intermediate steps, greatly simplifying the image generation workflow. 3) Knowledge Transfer: Benefit from

+learning in a unified format, OmniGen effectively transfers

+knowledge across different tasks, manages unseen tasks and

+domains, and exhibits novel capabilities. We also explore

+the model’s reasoning capabilities and potential applications of the chain-of-thought mechanism.

+This work represents the first attempt at a general-purpose image generation model,

+and we will release our resources at https://github.com/VectorSpaceLab/OmniGen to foster future advancements.*

+

+

+

+Make sure to check out the Schedulers [guide](../../using-diffusers/schedulers.md) to learn how to explore the tradeoff between scheduler speed and quality, and see the [reuse components across pipelines](../../using-diffusers/loading.md#reuse-a-pipeline) section to learn how to efficiently load the same components into multiple pipelines.

+

+

+

+This pipeline was contributed by [staoxiao](https://github.com/staoxiao). The original codebase can be found [here](https://github.com/VectorSpaceLab/OmniGen). The original weights can be found under [hf.co/shitao](https://huggingface.co/Shitao/OmniGen-v1).

+

+

+## Inference

+

+First, load the pipeline:

+

+```python

+import torch

+from diffusers import OmniGenPipeline

+pipe = OmniGenPipeline.from_pretrained(

+ "Shitao/OmniGen-v1-diffusers",

+ torch_dtype=torch.bfloat16

+)

+pipe.to("cuda")

+```

+

+For text-to-image, pass a text prompt. By default, OmniGen generates a 1024x1024 image.

+Set the `height` and `width` parameters to generate images of a different size.

+

+```py

+prompt = "Realistic photo. A young woman sits on a sofa, holding a book and facing the camera. She wears delicate silver hoop earrings adorned with tiny, sparkling diamonds that catch the light, with her long chestnut hair cascading over her shoulders. Her eyes are focused and gentle, framed by long, dark lashes. She is dressed in a cozy cream sweater, which complements her warm, inviting smile. Behind her, there is a table with a cup of water in a sleek, minimalist blue mug. The background is a serene indoor setting with soft natural light filtering through a window, adorned with tasteful art and flowers, creating a cozy and peaceful ambiance. 4K, HD."

+image = pipe(

+ prompt=prompt,

+ height=1024,

+ width=1024,

+ guidance_scale=3,

+ generator=torch.Generator(device="cpu").manual_seed(111),

+).images[0]

+image

+```

+

+OmniGen supports multimodal inputs.

+When the input includes an image, you need to add a placeholder `<img><|image_1|></img>` in the text prompt to represent the image.

+It is recommended to enable `use_input_image_size_as_output` to keep the edited image the same size as the original image.

+

+```py

+from diffusers.utils import load_image
+
+prompt="<img><|image_1|></img> Remove the woman's earrings. Replace the mug with a clear glass filled with sparkling iced cola."

+input_images=[load_image("https://raw.githubusercontent.com/VectorSpaceLab/OmniGen/main/imgs/docs_img/t2i_woman_with_book.png")]

+image = pipe(

+ prompt=prompt,

+ input_images=input_images,

+ guidance_scale=2,

+ img_guidance_scale=1.6,

+ use_input_image_size_as_output=True,

+ generator=torch.Generator(device="cpu").manual_seed(222)).images[0]

+image

+```

+
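The placeholder rule described above can be checked before calling the pipeline. The following is a minimal sketch and not part of this PR: `missing_image_tokens` is a hypothetical helper, and it looks only for the `<|image_k|>` token rather than the full placeholder string.

```python
from typing import List, Sequence


def missing_image_tokens(prompt: str, input_images: Sequence[object]) -> List[str]:
    """Return the `<|image_k|>` tokens (k = 1..len(input_images)) absent from `prompt`."""
    # One token is expected per input image, numbered from 1.
    expected = [f"<|image_{k + 1}|>" for k in range(len(input_images))]
    return [token for token in expected if token not in prompt]


prompt = "<|image_1|> Remove the woman's earrings."
print(missing_image_tokens(prompt, ["img_a"]))           # []
print(missing_image_tokens(prompt, ["img_a", "img_b"]))  # ['<|image_2|>']
```

An empty list means every input image has a matching token, so a prompt can be rejected early instead of waiting for the pipeline's own input validation.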

+

+## OmniGenPipeline

+

+[[autodoc]] OmniGenPipeline

+ - all

+ - __call__

+

+

diff --git a/docs/source/en/using-diffusers/omnigen.md b/docs/source/en/using-diffusers/omnigen.md

new file mode 100644

index 000000000000..a3d98e4e60cc

--- /dev/null

+++ b/docs/source/en/using-diffusers/omnigen.md

@@ -0,0 +1,314 @@

+

+# OmniGen

+

+OmniGen is an image generation model. Unlike existing text-to-image models, OmniGen is a single model designed to handle a variety of tasks (e.g., text-to-image, image editing, controllable generation). It has the following features:

+- Minimalist model architecture, consisting of only a VAE and a transformer module, for joint modeling of text and images.

+- Support for multimodal inputs. It can process any text-image mixed data as instructions for image generation, rather than relying solely on text.

+

+For more information, please refer to the [paper](https://arxiv.org/pdf/2409.11340).

+This guide will walk you through using OmniGen for various tasks and use cases.

+

+## Load model checkpoints

+Model weights may be stored in separate subfolders on the Hub or locally, in which case, you should use the [`~DiffusionPipeline.from_pretrained`] method.

+

+```py

+import torch

+from diffusers import OmniGenPipeline

+pipe = OmniGenPipeline.from_pretrained(

+ "Shitao/OmniGen-v1-diffusers",

+ torch_dtype=torch.bfloat16

+)

+```

+

+

+

+## Text-to-image

+

+For text-to-image, pass a text prompt. By default, OmniGen generates a 1024x1024 image.

+Set the `height` and `width` parameters to generate images of a different size.

+

+```py

+import torch

+from diffusers import OmniGenPipeline

+

+pipe = OmniGenPipeline.from_pretrained(

+ "Shitao/OmniGen-v1-diffusers",

+ torch_dtype=torch.bfloat16

+)

+pipe.to("cuda")

+

+prompt = "Realistic photo. A young woman sits on a sofa, holding a book and facing the camera. She wears delicate silver hoop earrings adorned with tiny, sparkling diamonds that catch the light, with her long chestnut hair cascading over her shoulders. Her eyes are focused and gentle, framed by long, dark lashes. She is dressed in a cozy cream sweater, which complements her warm, inviting smile. Behind her, there is a table with a cup of water in a sleek, minimalist blue mug. The background is a serene indoor setting with soft natural light filtering through a window, adorned with tasteful art and flowers, creating a cozy and peaceful ambiance. 4K, HD."

+image = pipe(

+ prompt=prompt,

+ height=1024,

+ width=1024,

+ guidance_scale=3,

+ generator=torch.Generator(device="cpu").manual_seed(111),

+).images[0]

+image

+```

+
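The pipeline's input check in this PR warns when `height` or `width` is not divisible by `self.vae_scale_factor * 2`. Below is a minimal sketch for choosing a compatible size up front. It assumes that product is 16 and that rounding down is acceptable; `snap_to_multiple` is a hypothetical helper, not a diffusers API.

```python
def snap_to_multiple(size: int, multiple: int = 16) -> int:
    """Round `size` down to the nearest positive multiple of `multiple`."""
    if multiple <= 0:
        raise ValueError("multiple must be positive")
    if size < multiple:
        raise ValueError(f"size {size} is smaller than {multiple}")
    return (size // multiple) * multiple


height = snap_to_multiple(1000)  # 992
width = snap_to_multiple(1024)   # 1024
print(height, width)
```

The resulting values can then be passed as `height` and `width` to the pipeline call.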

+

+

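+## Image edit
+
+OmniGen supports multimodal inputs.
+When the input includes an image, you need to add a placeholder `<img><|image_1|></img>` in the text prompt to represent the image.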

+It is recommended to enable `use_input_image_size_as_output` to keep the edited image the same size as the original image.

+

+```py

+import torch

+from diffusers import OmniGenPipeline

+from diffusers.utils import load_image

+

+pipe = OmniGenPipeline.from_pretrained(

+ "Shitao/OmniGen-v1-diffusers",

+ torch_dtype=torch.bfloat16

+)

+pipe.to("cuda")

+

+prompt="<img><|image_1|></img> Remove the woman's earrings. Replace the mug with a clear glass filled with sparkling iced cola."

+input_images=[load_image("https://raw.githubusercontent.com/VectorSpaceLab/OmniGen/main/imgs/docs_img/t2i_woman_with_book.png")]

+image = pipe(

+ prompt=prompt,

+ input_images=input_images,

+ guidance_scale=2,

+ img_guidance_scale=1.6,

+ use_input_image_size_as_output=True,

+ generator=torch.Generator(device="cpu").manual_seed(222)).images[0]

+image

+```

+

+[Figure: original image; edited image]
+

+prompt="[...] <img><|image_1|></img>"

+input_images=[load_image("https://raw.githubusercontent.com/VectorSpaceLab/OmniGen/main/imgs/docs_img/edit.png")]

+image = pipe(

+ prompt=prompt,

+ input_images=input_images,

+ guidance_scale=2,

+ img_guidance_scale=1.6,

+ use_input_image_size_as_output=True,

+ generator=torch.Generator(device="cpu").manual_seed(0)).images[0]

+image

+```

+

+

+

+

+prompt="[...] <img><|image_1|></img>"

+input_images=[load_image("https://raw.githubusercontent.com/VectorSpaceLab/OmniGen/main/imgs/docs_img/edit.png")]

+image1 = pipe(

+ prompt=prompt,

+ input_images=input_images,

+ guidance_scale=2,

+ img_guidance_scale=1.6,

+ use_input_image_size_as_output=True,

+ generator=torch.Generator(device="cpu").manual_seed(333)).images[0]

+image1

+

+prompt="Generate a new photo using the following picture and text as conditions:

<|image_1|>"

+input_images=[load_image("https://raw.githubusercontent.com/VectorSpaceLab/OmniGen/main/imgs/docs_img/edit.png")]

+image1 = pipe(

+ prompt=prompt,

+ input_images=input_images,

+ guidance_scale=2,

+ img_guidance_scale=1.6,

+ use_input_image_size_as_output=True,

+ generator=torch.Generator(device="cpu").manual_seed(333)).images[0]

+image1

+

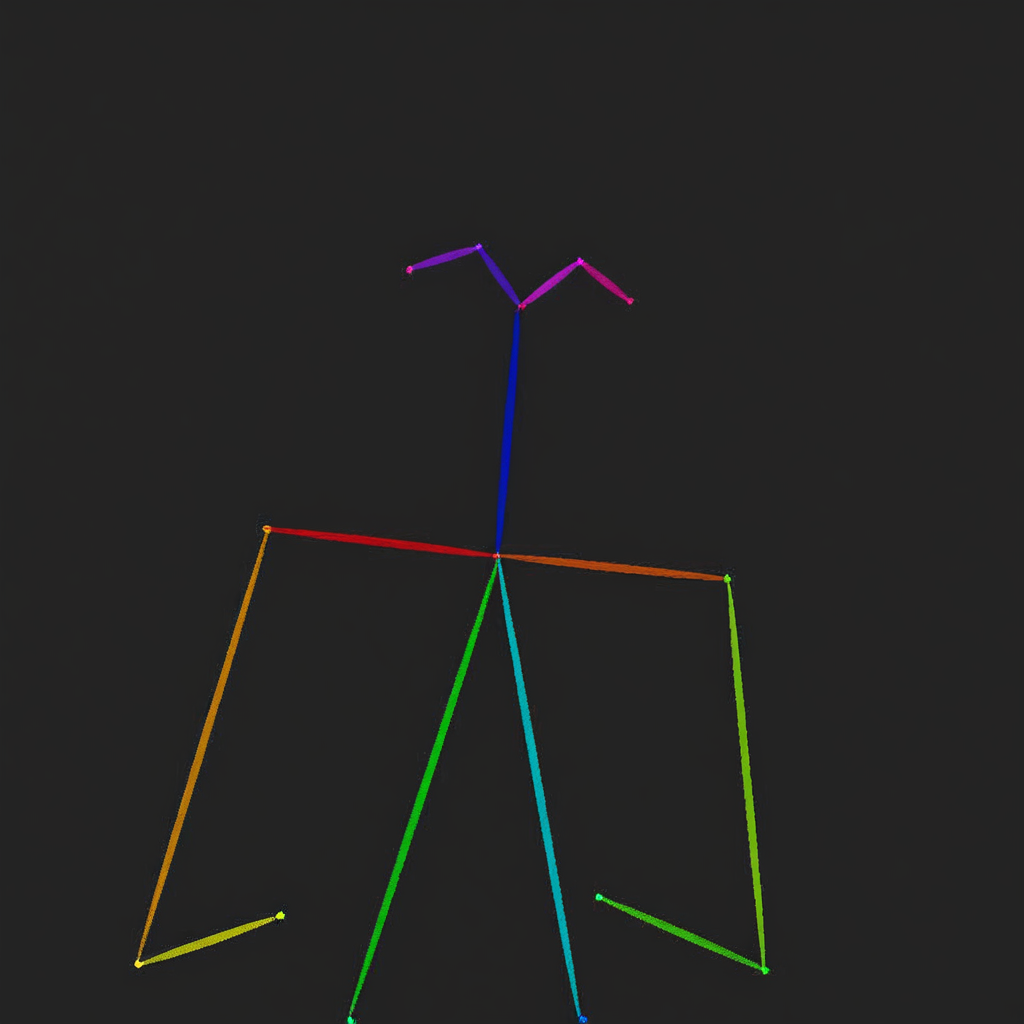

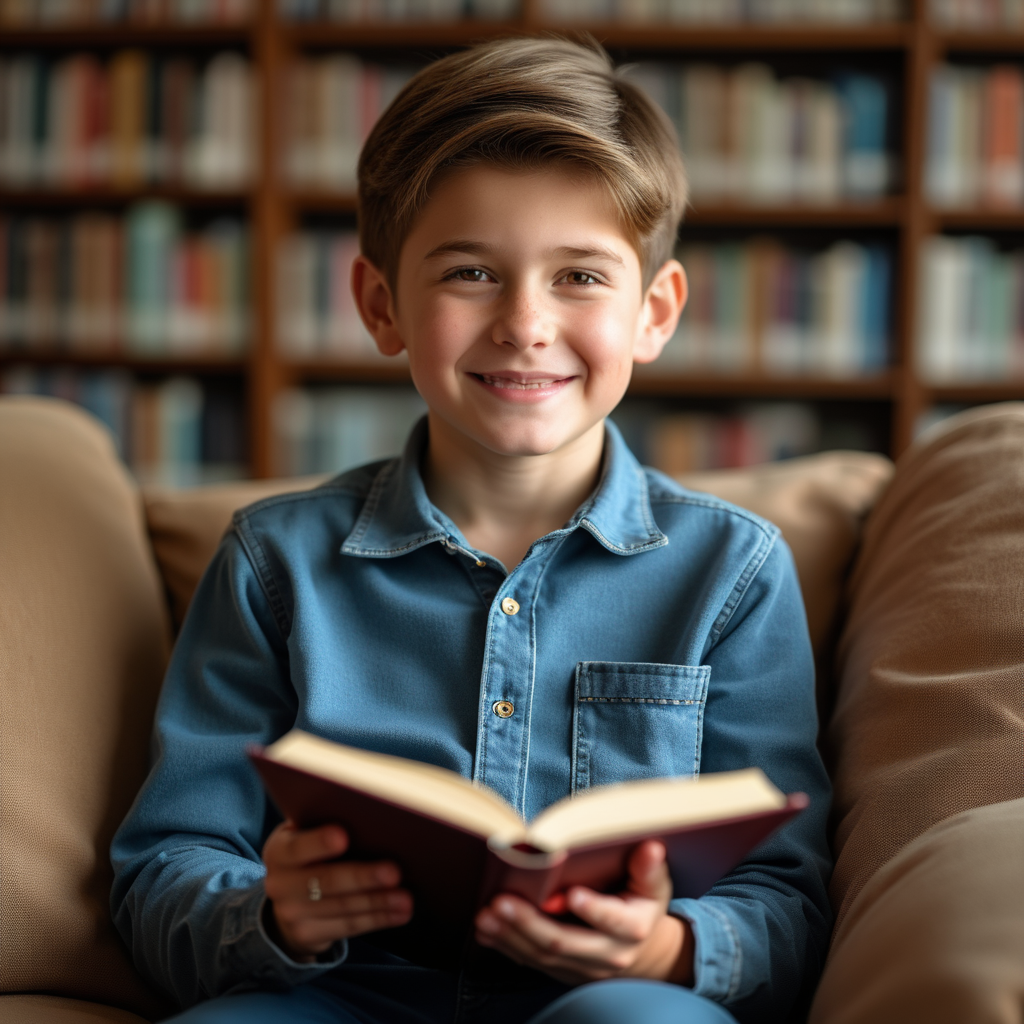

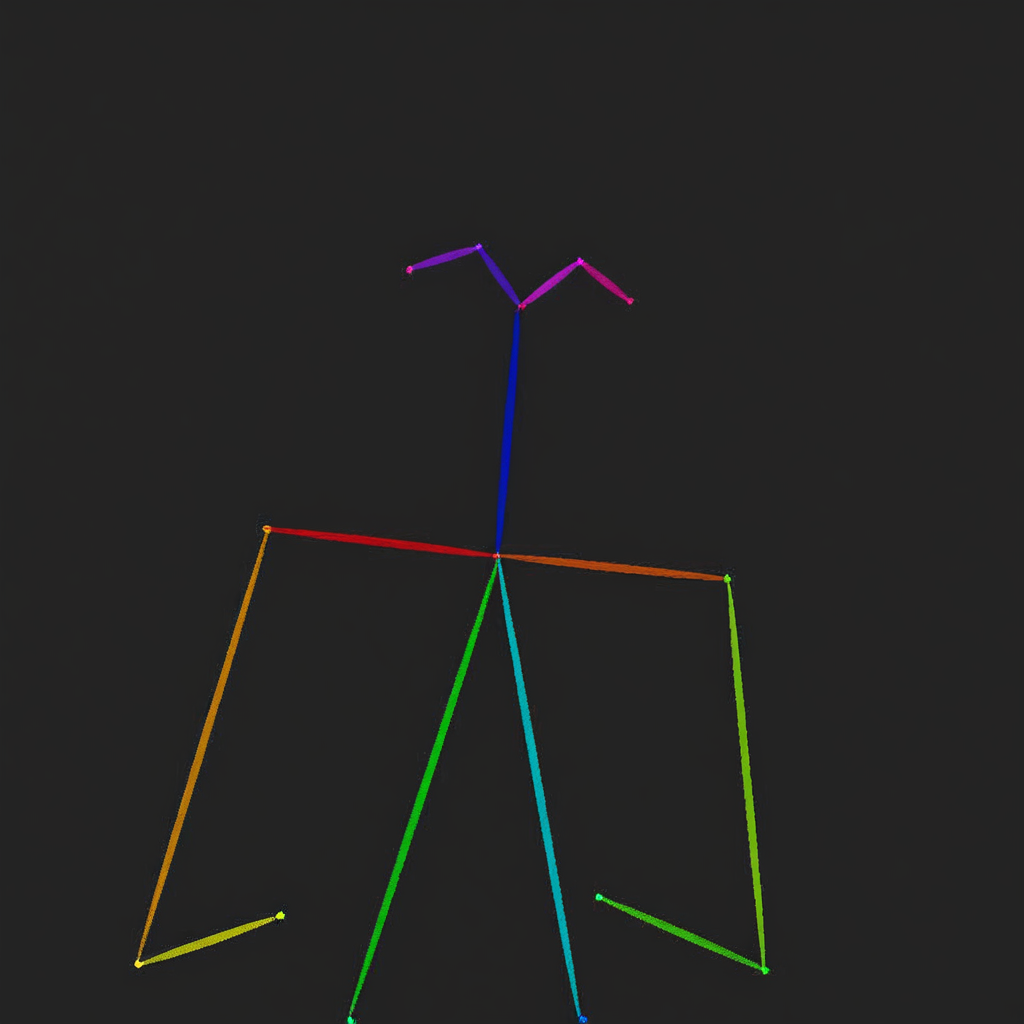

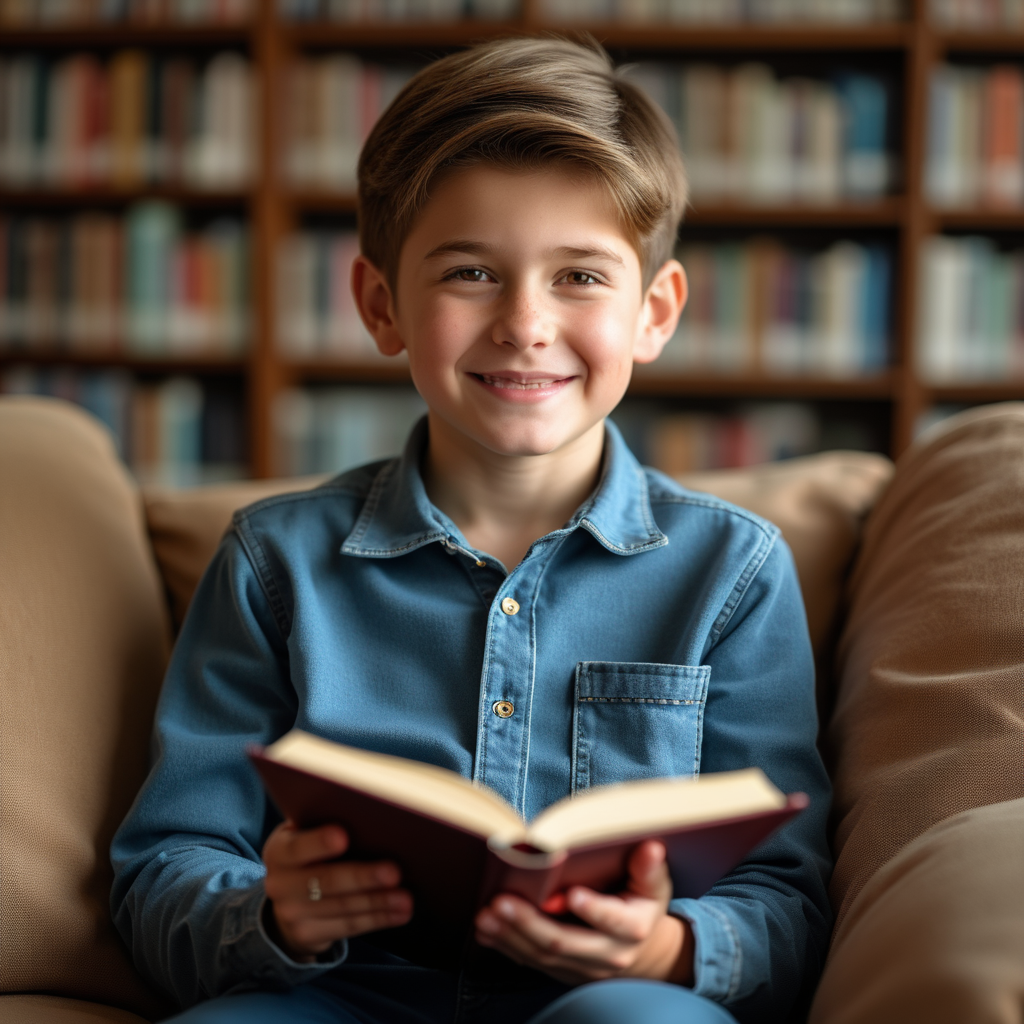

+prompt="Generate a new photo using the following picture and text as conditions: <img><|image_1|></img>\n A young boy is sitting on a sofa in the library, holding a book. His hair is neatly combed, and a faint smile plays on his lips, with a few freckles scattered across his cheeks. The library is quiet, with rows of shelves filled with books stretching out behind him."

+input_images=[load_image("https://raw.githubusercontent.com/VectorSpaceLab/OmniGen/main/imgs/docs_img/skeletal.png")]

+image2 = pipe(

+ prompt=prompt,

+ input_images=input_images,

+ guidance_scale=2,

+ img_guidance_scale=1.6,

+ use_input_image_size_as_output=True,

+ generator=torch.Generator(device="cpu").manual_seed(333)).images[0]

+image2

+```

+

+

+[Figure: original image; detected skeleton; skeleton to image]
+

+prompt="[...] <img><|image_1|></img>, generate a new photo: A young boy is sitting on a sofa in the library, holding a book. His hair is neatly combed, and a faint smile plays on his lips, with a few freckles scattered across his cheeks. The library is quiet, with rows of shelves filled with books stretching out behind him."

+input_images=[load_image("https://raw.githubusercontent.com/VectorSpaceLab/OmniGen/main/imgs/docs_img/edit.png")]

+image = pipe(

+ prompt=prompt,

+ input_images=input_images,

+ guidance_scale=2,

+ img_guidance_scale=1.6,

+ use_input_image_size_as_output=True,

+ generator=torch.Generator(device="cpu").manual_seed(0)).images[0]

+image

+```

+

+[Figure: generated image]
+

+prompt="[...] <img><|image_1|></img>. The woman is the woman on the left of <img><|image_2|></img>"

+input_image_1 = load_image("https://raw.githubusercontent.com/VectorSpaceLab/OmniGen/main/imgs/docs_img/3.png")

+input_image_2 = load_image("https://raw.githubusercontent.com/VectorSpaceLab/OmniGen/main/imgs/docs_img/4.png")

+input_images=[input_image_1, input_image_2]

+image = pipe(

+ prompt=prompt,

+ input_images=input_images,

+ height=1024,

+ width=1024,

+ guidance_scale=2.5,

+ img_guidance_scale=1.6,

+ generator=torch.Generator(device="cpu").manual_seed(666)).images[0]

+image

+```

+

+

+

+

+

input_image_1

+

+

+

+

input_image_2

+

+

+

+

generated image

+

+

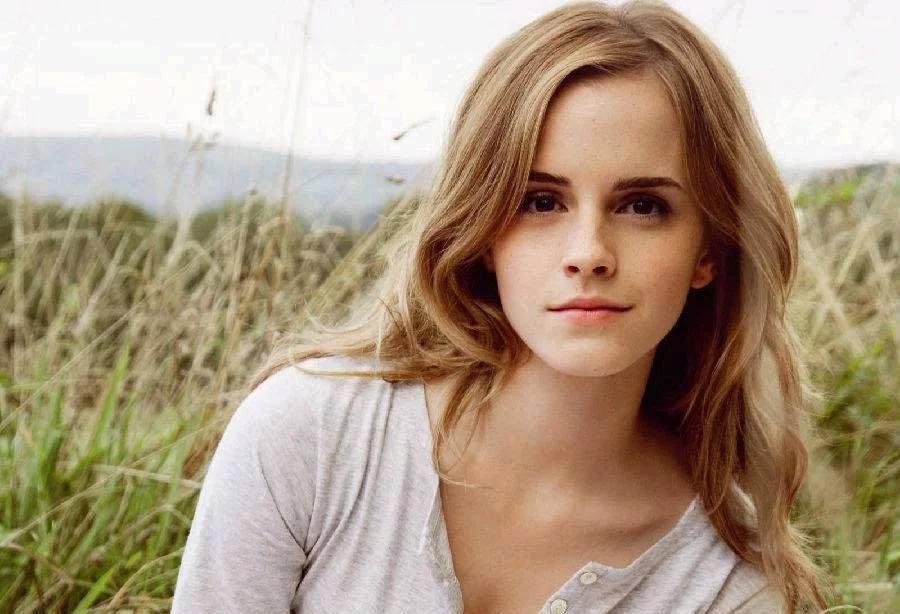

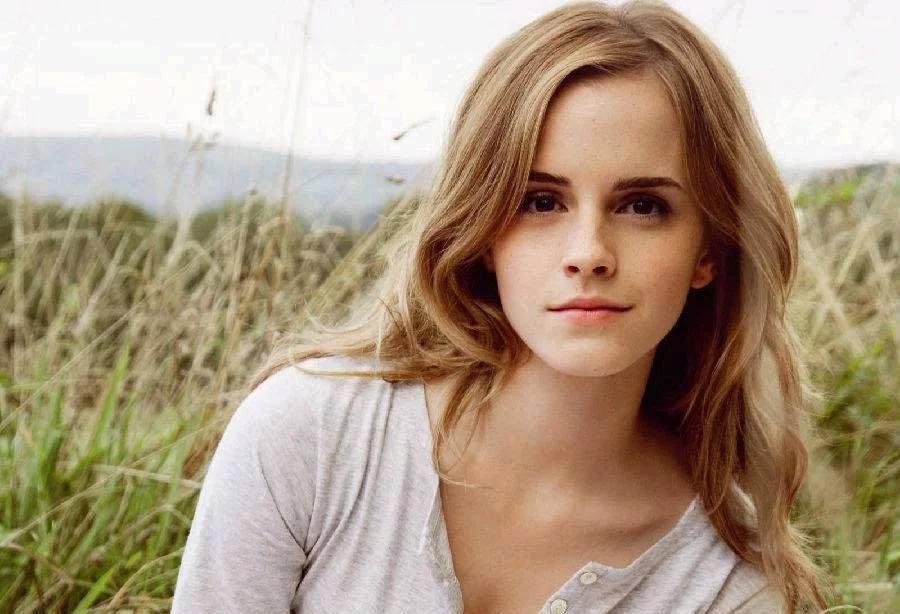

+prompt="[...] <img><|image_1|></img>. The long-sleeve blouse and a pleated skirt are <img><|image_2|></img>."

+input_image_1 = load_image("https://raw.githubusercontent.com/VectorSpaceLab/OmniGen/main/imgs/docs_img/emma.jpeg")

+input_image_2 = load_image("https://raw.githubusercontent.com/VectorSpaceLab/OmniGen/main/imgs/docs_img/dress.jpg")

+input_images=[input_image_1, input_image_2]

+image = pipe(

+ prompt=prompt,

+ input_images=input_images,

+ height=1024,

+ width=1024,

+ guidance_scale=2.5,

+ img_guidance_scale=1.6,

+ generator=torch.Generator(device="cpu").manual_seed(666)).images[0]

+image

+```

+

+

+[Figure: person image; clothing image; generated image]
+

+ if not all(f"<img><|image_{k + 1}|></img>" in prompt[i] for k in range(len(input_images[i]))):

+ raise ValueError(

+ f"prompt `{prompt[i]}` doesn't have enough placeholders for the input images `{input_images[i]}`"

+ )

+

+ if height % (self.vae_scale_factor * 2) != 0 or width % (self.vae_scale_factor * 2) != 0:

+ logger.warning(

+ f"`height` and `width` have to be divisible by {self.vae_scale_factor * 2} but are {height} and {width}. Dimensions will be resized accordingly"

+ )

+

+ if use_input_image_size_as_output:

+ if input_images is None or input_images[0] is None:

+ raise ValueError(

+ "`use_input_image_size_as_output` is set to True, but no input image was found. If you are performing a text-to-image task, please set it to False."

+ )

+

+ if callback_on_step_end_tensor_inputs is not None and not all(

+ k in self._callback_tensor_inputs for k in callback_on_step_end_tensor_inputs

+ ):

+ raise ValueError(

+ f"`callback_on_step_end_tensor_inputs` has to be in {self._callback_tensor_inputs}, but found {[k for k in callback_on_step_end_tensor_inputs if k not in self._callback_tensor_inputs]}"

+ )

+

+ def enable_vae_slicing(self):

+ r"""

+ Enable sliced VAE decoding. When this option is enabled, the VAE will split the input tensor in slices to

+ compute decoding in several steps. This is useful to save some memory and allow larger batch sizes.

+ """

+ self.vae.enable_slicing()

+

+ def disable_vae_slicing(self):

+ r"""

+ Disable sliced VAE decoding. If `enable_vae_slicing` was previously enabled, this method will go back to

+ computing decoding in one step.

+ """

+ self.vae.disable_slicing()

+

+ def enable_vae_tiling(self):

+ r"""

+ Enable tiled VAE decoding. When this option is enabled, the VAE will split the input tensor into tiles to

+ compute decoding and encoding in several steps. This is useful for saving a large amount of memory and to allow

+ processing larger images.

+ """

+ self.vae.enable_tiling()

+

+ def disable_vae_tiling(self):

+ r"""

+ Disable tiled VAE decoding. If `enable_vae_tiling` was previously enabled, this method will go back to

+ computing decoding in one step.

+ """

+ self.vae.disable_tiling()

+

+ # Copied from diffusers.pipelines.stable_diffusion_3.pipeline_stable_diffusion_3.StableDiffusion3Pipeline.prepare_latents

+ def prepare_latents(

+ self,

+ batch_size,

+ num_channels_latents,

+ height,

+ width,

+ dtype,

+ device,

+ generator,

+ latents=None,

+ ):

+ if latents is not None:

+ return latents.to(device=device, dtype=dtype)

+

+ shape = (

+ batch_size,

+ num_channels_latents,

+ int(height) // self.vae_scale_factor,

+ int(width) // self.vae_scale_factor,

+ )

+

+ if isinstance(generator, list) and len(generator) != batch_size:

+ raise ValueError(

+ f"You have passed a list of generators of length {len(generator)}, but requested an effective batch"

+ f" size of {batch_size}. Make sure the batch size matches the length of the generators."

+ )

+

+ latents = randn_tensor(shape, generator=generator, device=device, dtype=dtype)

+

+ return latents

+

+ @property

+ def guidance_scale(self):

+ return self._guidance_scale

+

+ @property

+ def num_timesteps(self):

+ return self._num_timesteps

+

+ @property

+ def interrupt(self):

+ return self._interrupt

+

+ @torch.no_grad()

+ @replace_example_docstring(EXAMPLE_DOC_STRING)

+ def __call__(

+ self,

+ prompt: Union[str, List[str]],

+ input_images: Union[PipelineImageInput, List[PipelineImageInput]] = None,

+ height: Optional[int] = None,

+ width: Optional[int] = None,

+ num_inference_steps: int = 50,

+ max_input_image_size: int = 1024,

+ timesteps: List[int] = None,

+ guidance_scale: float = 2.5,

+ img_guidance_scale: float = 1.6,

+ use_input_image_size_as_output: bool = False,

+ num_images_per_prompt: Optional[int] = 1,

+ generator: Optional[Union[torch.Generator, List[torch.Generator]]] = None,

+ latents: Optional[torch.Tensor] = None,

+ output_type: Optional[str] = "pil",

+ return_dict: bool = True,

+ attention_kwargs: Optional[Dict[str, Any]] = None,

+ callback_on_step_end: Optional[Callable[[int, int, Dict], None]] = None,

+ callback_on_step_end_tensor_inputs: List[str] = ["latents"],

+ max_sequence_length: int = 120000,

+ ):

+ r"""

+ Function invoked when calling the pipeline for generation.

+

+ Args:

+ prompt (`str` or `List[str]`, *optional*):

+ The prompt or prompts to guide the image generation. If the input includes images, you need to add

+ placeholders `<img><|image_i|></img>` in the prompt to indicate the position of the i-th image.
+ input_images (`PipelineImageInput` or `List[PipelineImageInput]`, *optional*):
+ The list of input images. The `<|image_i|>` token in the prompt is replaced with the i-th image in the list.

+ height (`int`, *optional*, defaults to self.unet.config.sample_size * self.vae_scale_factor):

+ The height in pixels of the generated image. This is set to 1024 by default for the best results.

+ width (`int`, *optional*, defaults to self.unet.config.sample_size * self.vae_scale_factor):

+ The width in pixels of the generated image. This is set to 1024 by default for the best results.

+ num_inference_steps (`int`, *optional*, defaults to 50):

+ The number of denoising steps. More denoising steps usually lead to a higher quality image at the

+ expense of slower inference.

+ max_input_image_size (`int`, *optional*, defaults to 1024):

+ The maximum size of an input image; input images larger than this are cropped to the maximum size.

+ timesteps (`List[int]`, *optional*):

+ Custom timesteps to use for the denoising process with schedulers which support a `timesteps` argument

+ in their `set_timesteps` method. If not defined, the default behavior when `num_inference_steps` is

+ passed will be used. Must be in descending order.

+ guidance_scale (`float`, *optional*, defaults to 2.5):

+ Guidance scale as defined in [Classifier-Free Diffusion Guidance](https://arxiv.org/abs/2207.12598).

+ `guidance_scale` is defined as `w` of equation 2. of [Imagen

+ Paper](https://arxiv.org/pdf/2205.11487.pdf). Guidance scale is enabled by setting `guidance_scale >

+ 1`. Higher guidance scale encourages to generate images that are closely linked to the text `prompt`,

+ usually at the expense of lower image quality.

+ img_guidance_scale (`float`, *optional*, defaults to 1.6):

+ Defined as equation 3 in [InstructPix2Pix](https://arxiv.org/pdf/2211.09800).

+ use_input_image_size_as_output (`bool`, defaults to `False`):

+ Whether to use the input image size as the output image size. This is useful for single-image inputs,

+ e.g., image editing tasks.

+ num_images_per_prompt (`int`, *optional*, defaults to 1):

+ The number of images to generate per prompt.

+ generator (`torch.Generator` or `List[torch.Generator]`, *optional*):

+ One or a list of [torch generator(s)](https://pytorch.org/docs/stable/generated/torch.Generator.html)

+ to make generation deterministic.

+ latents (`torch.Tensor`, *optional*):

+ Pre-generated noisy latents, sampled from a Gaussian distribution, to be used as inputs for image

+ generation. Can be used to tweak the same generation with different prompts. If not provided, a latents

+ tensor will be generated by sampling using the supplied random `generator`.

+ output_type (`str`, *optional*, defaults to `"pil"`):

+ The output format of the generated image. Choose between

+ [PIL](https://pillow.readthedocs.io/en/stable/): `PIL.Image.Image` or `np.array`.

+ return_dict (`bool`, *optional*, defaults to `True`):

+ Whether or not to return a [`~pipelines.ImagePipelineOutput`] instead of a plain tuple.

+ attention_kwargs (`dict`, *optional*):

+ A kwargs dictionary that if specified is passed along to the `AttentionProcessor` as defined under

+ `self.processor` in

+ [diffusers.models.attention_processor](https://github.com/huggingface/diffusers/blob/main/src/diffusers/models/attention_processor.py).

+ callback_on_step_end (`Callable`, *optional*):

+ A function that is called at the end of each denoising step during inference. The function is called

+ with the following arguments: `callback_on_step_end(self: DiffusionPipeline, step: int, timestep: int,

+ callback_kwargs: Dict)`. `callback_kwargs` will include a list of all tensors as specified by

+ `callback_on_step_end_tensor_inputs`.

+ callback_on_step_end_tensor_inputs (`List`, *optional*):

+ The list of tensor inputs for the `callback_on_step_end` function. The tensors specified in the list

+ will be passed as `callback_kwargs` argument. You will only be able to include variables listed in the

+ `._callback_tensor_inputs` attribute of your pipeline class.

+ max_sequence_length (`int`, defaults to 512): Maximum sequence length to use with the `prompt`.

+

+ Examples:

+

+ Returns: [`~pipelines.ImagePipelineOutput`] or `tuple`:

+ If `return_dict` is `True`, [`~pipelines.ImagePipelineOutput`] is returned, otherwise a `tuple` is returned

+ where the first element is a list with the generated images.

+ """

+

+ height = height or self.default_sample_size * self.vae_scale_factor

+ width = width or self.default_sample_size * self.vae_scale_factor

+ num_cfg = 2 if input_images is not None else 1

+ use_img_cfg = input_images is not None

+ if isinstance(prompt, str):

+ prompt = [prompt]

+ input_images = [input_images]

+

+ # 1. Check inputs. Raise error if not correct

+ self.check_inputs(

+ prompt,

+ input_images,

+ height,

+ width,

+ use_input_image_size_as_output,

+ callback_on_step_end_tensor_inputs=callback_on_step_end_tensor_inputs,

+ max_sequence_length=max_sequence_length,

+ )

+

+ self._guidance_scale = guidance_scale

+ self._attention_kwargs = attention_kwargs

+ self._interrupt = False

+

+ # 2. Define call parameters

+ batch_size = len(prompt)

+ device = self._execution_device

+

+ # 3. process multi-modal instructions

+ if max_input_image_size != self.multimodal_processor.max_image_size:

+ self.multimodal_processor.reset_max_image_size(max_image_size=max_input_image_size)

+ processed_data = self.multimodal_processor(

+ prompt,

+ input_images,

+ height=height,

+ width=width,

+ use_img_cfg=use_img_cfg,

+ use_input_image_size_as_output=use_input_image_size_as_output,

+ num_images_per_prompt=num_images_per_prompt,

+ )

+ processed_data["input_ids"] = processed_data["input_ids"].to(device)

+ processed_data["attention_mask"] = processed_data["attention_mask"].to(device)

+ processed_data["position_ids"] = processed_data["position_ids"].to(device)

+

+ # 4. Encode input images

+ input_img_latents = self.encode_input_images(processed_data["input_pixel_values"], device=device)

+

+ # 5. Prepare timesteps

+ sigmas = np.linspace(1, 0, num_inference_steps + 1)[:num_inference_steps]

+ timesteps, num_inference_steps = retrieve_timesteps(

+ self.scheduler, num_inference_steps, device, timesteps, sigmas=sigmas

+ )

+ self._num_timesteps = len(timesteps)

+

+ # 6. Prepare latents.

+ if use_input_image_size_as_output:

+ height, width = processed_data["input_pixel_values"][0].shape[-2:]

+ latent_channels = self.transformer.config.in_channels

+ latents = self.prepare_latents(

+ batch_size * num_images_per_prompt,

+ latent_channels,

+ height,

+ width,

+ self.transformer.dtype,

+ device,

+ generator,

+ latents,

+ )

+

+ # 7. Denoising loop

+ with self.progress_bar(total=num_inference_steps) as progress_bar:

+ for i, t in enumerate(timesteps):

+ # expand the latents if we are doing classifier free guidance

+ latent_model_input = torch.cat([latents] * (num_cfg + 1))

+

+ # broadcast to batch dimension in a way that's compatible with ONNX/Core ML

+ timestep = t.expand(latent_model_input.shape[0])

+

+ noise_pred = self.transformer(

+ hidden_states=latent_model_input,

+ timestep=timestep,

+ input_ids=processed_data["input_ids"],

+ input_img_latents=input_img_latents,

+ input_image_sizes=processed_data["input_image_sizes"],

+ attention_mask=processed_data["attention_mask"],

+ position_ids=processed_data["position_ids"],

+ attention_kwargs=attention_kwargs,

+ return_dict=False,

+ )[0]

+

+ if num_cfg == 2:

+ cond, uncond, img_cond = torch.split(noise_pred, len(noise_pred) // 3, dim=0)

+ noise_pred = uncond + img_guidance_scale * (img_cond - uncond) + guidance_scale * (cond - img_cond)

+ else:

+ cond, uncond = torch.split(noise_pred, len(noise_pred) // 2, dim=0)

+ noise_pred = uncond + guidance_scale * (cond - uncond)

+

+ # compute the previous noisy sample x_t -> x_t-1

+ latents_dtype = latents.dtype

+ latents = self.scheduler.step(noise_pred, t, latents, return_dict=False)[0]

+

+ if callback_on_step_end is not None:

+ callback_kwargs = {}

+ for k in callback_on_step_end_tensor_inputs:

+ callback_kwargs[k] = locals()[k]

+ callback_outputs = callback_on_step_end(self, i, t, callback_kwargs)

+

+ latents = callback_outputs.pop("latents", latents)

+

+ if latents.dtype != latents_dtype:

+ if torch.backends.mps.is_available():

+ # some platforms (eg. apple mps) misbehave due to a pytorch bug: https://github.com/pytorch/pytorch/pull/99272

+ latents = latents.to(latents_dtype)

+

+ progress_bar.update()

+

+ if not output_type == "latent":

+ latents = latents.to(self.vae.dtype)

+ latents = latents / self.vae.config.scaling_factor

+ image = self.vae.decode(latents, return_dict=False)[0]

+ image = self.image_processor.postprocess(image, output_type=output_type)

+ else:

+ image = latents

+

+ # Offload all models

+ self.maybe_free_model_hooks()

+

+ if not return_dict:

+ return (image,)

+

+ return ImagePipelineOutput(images=image)
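The two guidance branches in the denoising loop above can be illustrated on plain Python floats. This is a minimal sketch, not the pipeline's implementation: `combine_guidance` is a hypothetical helper name, and lists of floats stand in for the noise-prediction tensors that the loop splits with `torch.split`.

```python
from typing import List, Optional


def combine_guidance(
    cond: List[float],
    uncond: List[float],
    img_cond: Optional[List[float]] = None,
    guidance_scale: float = 2.5,
    img_guidance_scale: float = 1.6,
) -> List[float]:
    """Toy version of the guidance arithmetic in the loop above (hypothetical helper)."""
    if img_cond is None:
        # Text-only case (num_cfg == 1): standard classifier-free guidance.
        return [u + guidance_scale * (c - u) for c, u in zip(cond, uncond)]
    # Image-conditioned case (num_cfg == 2): first move from the unconditional
    # prediction towards the image-only prediction, then from the image-only
    # prediction towards the fully conditioned (text + image) prediction.
    return [
        u + img_guidance_scale * (ic - u) + guidance_scale * (c - ic)
        for c, u, ic in zip(cond, uncond, img_cond)
    ]
```

With both scales set to 1 the image-conditioned formula collapses to the fully conditioned prediction, which is a quick way to sanity-check the sign of each term.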

diff --git a/src/diffusers/pipelines/omnigen/processor_omnigen.py b/src/diffusers/pipelines/omnigen/processor_omnigen.py

new file mode 100644

index 000000000000..75d272ac5140

--- /dev/null

+++ b/src/diffusers/pipelines/omnigen/processor_omnigen.py

@@ -0,0 +1,327 @@

+# Copyright 2024 OmniGen team and The HuggingFace Team. All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import re

+from typing import Dict, List

+

+import numpy as np

+import torch

+from PIL import Image

+from torchvision import transforms

+

+

+def crop_image(pil_image, max_image_size):

+ """

+ Resize and crop the image so that its height and width do not exceed `max_image_size`, while ensuring both the height and

+ width are multiples of 16.

+ """

+ while min(*pil_image.size) >= 2 * max_image_size:

+ pil_image = pil_image.resize(tuple(x // 2 for x in pil_image.size), resample=Image.BOX)

+

+ if max(*pil_image.size) > max_image_size:

+ scale = max_image_size / max(*pil_image.size)

+ pil_image = pil_image.resize(tuple(round(x * scale) for x in pil_image.size), resample=Image.BICUBIC)

+

+ if min(*pil_image.size) < 16:

+ scale = 16 / min(*pil_image.size)

+ pil_image = pil_image.resize(tuple(round(x * scale) for x in pil_image.size), resample=Image.BICUBIC)

+

+ arr = np.array(pil_image)

+ crop_y1 = (arr.shape[0] % 16) // 2

+ crop_y2 = arr.shape[0] % 16 - crop_y1

+

+ crop_x1 = (arr.shape[1] % 16) // 2

+ crop_x2 = arr.shape[1] % 16 - crop_x1

+

+ arr = arr[crop_y1 : arr.shape[0] - crop_y2, crop_x1 : arr.shape[1] - crop_x2]

+ return Image.fromarray(arr)
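To see what sizes `crop_image` produces without loading an image, the size arithmetic can be replayed on integers. The sketch below is an assumption-labeled illustration: `cropped_size` is a hypothetical helper that mirrors the halving, rescaling, and centre-crop steps above but never touches pixel data.

```python
from typing import Tuple


def cropped_size(width: int, height: int, max_image_size: int = 1024) -> Tuple[int, int]:
    """Return the (width, height) that the steps in `crop_image` would yield (hypothetical helper)."""
    size = (width, height)
    # Step 1: halve repeatedly while the shorter side is at least twice the limit.
    while min(size) >= 2 * max_image_size:
        size = tuple(x // 2 for x in size)
    # Step 2: scale down so that the longer side equals the limit.
    if max(size) > max_image_size:
        scale = max_image_size / max(size)
        size = tuple(round(x * scale) for x in size)
    # Step 3: scale up very small images so that the shorter side is at least 16.
    if min(size) < 16:
        scale = 16 / min(size)
        size = tuple(round(x * scale) for x in size)
    # Step 4: the centre crop removes the remainder modulo 16 on each axis.
    return tuple(x - x % 16 for x in size)
```

For example, a 1000x700 input is not resized at all and only loses the modulo-16 remainder on each axis.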

+

+

+class OmniGenMultiModalProcessor:

+ def __init__(self, text_tokenizer, max_image_size: int = 1024):

+ self.text_tokenizer = text_tokenizer

+ self.max_image_size = max_image_size

+

+ self.image_transform = transforms.Compose(

+ [

+ transforms.Lambda(lambda pil_image: crop_image(pil_image, max_image_size)),

+ transforms.ToTensor(),

+ transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5], inplace=True),

+ ]

+ )

+

+ self.collator = OmniGenCollator()

+

+ def reset_max_image_size(self, max_image_size):

+ self.max_image_size = max_image_size

+ self.image_transform = transforms.Compose(

+ [

+ transforms.Lambda(lambda pil_image: crop_image(pil_image, max_image_size)),

+ transforms.ToTensor(),

+ transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5], inplace=True),

+ ]

+ )

+

+ def process_image(self, image):

+ if isinstance(image, str):

+ image = Image.open(image).convert("RGB")

+ return self.image_transform(image)

+

+ def process_multi_modal_prompt(self, text, input_images):

+ text = self.add_prefix_instruction(text)

+ if input_images is None or len(input_images) == 0:

+ model_inputs = self.text_tokenizer(text)

+ return {"input_ids": model_inputs.input_ids, "pixel_values": None, "image_sizes": None}

+

+ pattern = r"<\|image_\d+\|>"

+ prompt_chunks = [self.text_tokenizer(chunk).input_ids for chunk in re.split(pattern, text)]

+

+ for i in range(1, len(prompt_chunks)):

+ if prompt_chunks[i][0] == 1:

+ prompt_chunks[i] = prompt_chunks[i][1:]

+

+ image_tags = re.findall(pattern, text)

+ image_ids = [int(s.split("|")[1].split("_")[-1]) for s in image_tags]

+

+ unique_image_ids = sorted(set(image_ids))

+ assert unique_image_ids == list(

+ range(1, len(unique_image_ids) + 1)

+ ), f"image_ids must start from 1, and must be continuous int, e.g. [1, 2, 3], cannot be {unique_image_ids}"

+ # total images must be the same as the number of image tags

+ assert (

+ len(unique_image_ids) == len(input_images)

+ ), f"total images must be the same as the number of image tags, got {len(unique_image_ids)} image tags and {len(input_images)} images"

+

+ input_images = [input_images[x - 1] for x in image_ids]

+

+ all_input_ids = []

+ img_inx = []

+ for i in range(len(prompt_chunks)):

+ all_input_ids.extend(prompt_chunks[i])

+ if i != len(prompt_chunks) - 1:

+ start_inx = len(all_input_ids)

+ size = input_images[i].size(-2) * input_images[i].size(-1) // 16 // 16

+ img_inx.append([start_inx, start_inx + size])

+ all_input_ids.extend([0] * size)

+

+ return {"input_ids": all_input_ids, "pixel_values": input_images, "image_sizes": img_inx}
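The way `process_multi_modal_prompt` interleaves text tokens with image placeholders can be sketched with a toy tokenizer. Everything named here is an assumption for illustration: `layout_prompt` is a hypothetical helper, whitespace splitting stands in for the real text tokenizer, and images are described only by their `(height, width)`.

```python
import re
from typing import Callable, List, Sequence, Tuple

TAG = r"<\|image_\d+\|>"       # same pattern as above, used for splitting
TAG_ID = r"<\|image_(\d+)\|>"  # capturing variant, used to read the index


def layout_prompt(
    text: str,
    image_hw: Sequence[Tuple[int, int]],
    tokenize: Callable[[str], List[str]] = str.split,
) -> Tuple[list, List[List[int]]]:
    """Return (tokens, spans); each span is the [start, end) slot reserved for one image."""
    chunks = [tokenize(chunk) for chunk in re.split(TAG, text)]
    image_ids = [int(n) for n in re.findall(TAG_ID, text)]

    unique_ids = sorted(set(image_ids))
    if unique_ids != list(range(1, len(unique_ids) + 1)):
        raise ValueError(f"image ids must be 1, 2, 3, ...; got {unique_ids}")
    if len(unique_ids) != len(image_hw):
        raise ValueError("number of distinct image tags must match number of images")

    tokens: list = []
    spans: List[List[int]] = []
    for i, chunk in enumerate(chunks):
        tokens.extend(chunk)
        if i < len(image_ids):
            height, width = image_hw[image_ids[i] - 1]
            size = height * width // 16 // 16  # one slot per 16x16 pixel block
            start = len(tokens)
            spans.append([start, start + size])
            tokens.extend([0] * size)  # placeholder ids, filled with image latents later
    return tokens, spans
```

A 32x32 image reserves four slots and a 16x48 image reserves three, so the spans show exactly where image embeddings would be written into the sequence.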

+

+ def add_prefix_instruction(self, prompt):

+ user_prompt = "<|user|>\n"

+ generation_prompt = "Generate an image according to the following instructions\n"

+ assistant_prompt = "<|assistant|>\n<|diffusion|>"

+ prompt_suffix = "<|end|>\n"

+ prompt = f"{user_prompt}{generation_prompt}{prompt}{prompt_suffix}{assistant_prompt}"

+ return prompt

+

+ def __call__(

+ self,

+ instructions: List[str],

+ input_images: List[List[str]] = None,

+ height: int = 1024,

+ width: int = 1024,

+ negative_prompt: str = "low quality, jpeg artifacts, ugly, duplicate, morbid, mutilated, extra fingers, mutated hands, poorly drawn hands, poorly drawn face, mutation, deformed, blurry, dehydrated, bad anatomy, bad proportions, extra limbs, cloned face, disfigured, gross proportions, malformed limbs, missing arms, missing legs, extra arms, extra legs, fused fingers, too many fingers.",

+ use_img_cfg: bool = True,

+ separate_cfg_input: bool = False,

+ use_input_image_size_as_output: bool = False,

+ num_images_per_prompt: int = 1,

+ ) -> Dict:

+ if isinstance(instructions, str):

+ instructions = [instructions]

+ input_images = [input_images]

+

+ input_data = []

+ for i in range(len(instructions)):

+ cur_instruction = instructions[i]

+ cur_input_images = None if input_images is None else input_images[i]

+ if cur_input_images is not None and len(cur_input_images) > 0:

+ cur_input_images = [self.process_image(x) for x in cur_input_images]

+ else:

+ cur_input_images = None

+ assert "<img><|image_1|></img>" not in cur_instruction

+

+ mllm_input = self.process_multi_modal_prompt(cur_instruction, cur_input_images)

+

+ neg_mllm_input, img_cfg_mllm_input = None, None

+ neg_mllm_input = self.process_multi_modal_prompt(negative_prompt, None)

+ if use_img_cfg:

+ if cur_input_images is not None and len(cur_input_images) >= 1:

+ img_cfg_prompt = [f"<img><|image_{i + 1}|></img>" for i in range(len(cur_input_images))]

+ img_cfg_mllm_input = self.process_multi_modal_prompt(" ".join(img_cfg_prompt), cur_input_images)

+ else:

+ img_cfg_mllm_input = neg_mllm_input

+

+ for _ in range(num_images_per_prompt):

+ if use_input_image_size_as_output:

+ input_data.append(

+ (

+ mllm_input,

+ neg_mllm_input,

+ img_cfg_mllm_input,

+ [mllm_input["pixel_values"][0].size(-2), mllm_input["pixel_values"][0].size(-1)],

+ )

+ )

+ else:

+ input_data.append((mllm_input, neg_mllm_input, img_cfg_mllm_input, [height, width]))

+

+ return self.collator(input_data)

+

+

+class OmniGenCollator:

+ def __init__(self, pad_token_id=2, hidden_size=3072):

+ self.pad_token_id = pad_token_id

+ self.hidden_size = hidden_size

+

+ def create_position(self, attention_mask, num_tokens_for_output_images):

+ position_ids = []

+ text_length = attention_mask.size(-1)

+ img_length = max(num_tokens_for_output_images)

+ for mask in attention_mask:

+ temp_l = torch.sum(mask)

+ temp_position = [0] * (text_length - temp_l) + list(

+ range(temp_l + img_length + 1)

+ ) # we add a time embedding into the sequence, so add one more token

+ position_ids.append(temp_position)

+ return torch.LongTensor(position_ids)
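The position layout built by `create_position` is easier to read on plain lists. This is a minimal sketch under stated assumptions: `build_position_ids` is a hypothetical name, and nested Python lists replace the attention-mask tensor.

```python
from typing import List


def build_position_ids(attention_mask: List[List[int]], img_length: int) -> List[List[int]]:
    """Left-padded rows get position 0 on the padding, then 0..n over the real tokens."""
    text_length = len(attention_mask[0])
    position_ids = []
    for mask in attention_mask:
        real = sum(mask)  # number of non-padding text tokens in this row
        # One extra position accounts for the time-embedding token that is
        # placed between the text tokens and the output-image tokens.
        row = [0] * (text_length - real) + list(range(real + img_length + 1))
        position_ids.append(row)
    return position_ids
```

Every row ends up with `text_length + img_length + 1` entries, regardless of how much padding it carries.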

+

+ def create_mask(self, attention_mask, num_tokens_for_output_images):

+ """

+ OmniGen applies causal attention to each element in the sequence, but applies bidirectional attention within

+ each image sequence. Reference: [OmniGen](https://arxiv.org/pdf/2409.11340)

+ """

+ extended_mask = []

+ padding_images = []

+ text_length = attention_mask.size(-1)

+ img_length = max(num_tokens_for_output_images)

+ seq_len = text_length + img_length + 1 # we add a time embedding into the sequence, so add one more token

+ inx = 0

+ for mask in attention_mask:

+ temp_l = torch.sum(mask)

+ pad_l = text_length - temp_l

+

+ temp_mask = torch.tril(torch.ones(size=(temp_l + 1, temp_l + 1)))

+

+ image_mask = torch.zeros(size=(temp_l + 1, img_length))

+ temp_mask = torch.cat([temp_mask, image_mask], dim=-1)

+

+ image_mask = torch.ones(size=(img_length, temp_l + img_length + 1))

+ temp_mask = torch.cat([temp_mask, image_mask], dim=0)

+

+ if pad_l > 0:

+ pad_mask = torch.zeros(size=(temp_l + 1 + img_length, pad_l))

+ temp_mask = torch.cat([pad_mask, temp_mask], dim=-1)

+

+ pad_mask = torch.ones(size=(pad_l, seq_len))

+ temp_mask = torch.cat([pad_mask, temp_mask], dim=0)

+

+ true_img_length = num_tokens_for_output_images[inx]

+ pad_img_length = img_length - true_img_length

+ if pad_img_length > 0:

+ temp_mask[:, -pad_img_length:] = 0

+ temp_padding_imgs = torch.zeros(size=(1, pad_img_length, self.hidden_size))

+ else:

+ temp_padding_imgs = None

+

+ extended_mask.append(temp_mask.unsqueeze(0))

+ padding_images.append(temp_padding_imgs)

+ inx += 1

+ return torch.cat(extended_mask, dim=0), padding_images

+

+ def adjust_attention_for_input_images(self, attention_mask, image_sizes):

+ for b_inx in image_sizes.keys():

+ for start_inx, end_inx in image_sizes[b_inx]:

+ attention_mask[b_inx][start_inx:end_inx, start_inx:end_inx] = 1

+

+ return attention_mask

+

+ def pad_input_ids(self, input_ids, image_sizes):

+ max_l = max([len(x) for x in input_ids])

+ padded_ids = []

+ attention_mask = []

+

+ for i in range(len(input_ids)):

+ temp_ids = input_ids[i]

+ temp_l = len(temp_ids)

+ pad_l = max_l - temp_l

+ if pad_l == 0:

+ attention_mask.append([1] * max_l)

+ padded_ids.append(temp_ids)

+ else:

+ attention_mask.append([0] * pad_l + [1] * temp_l)

+ padded_ids.append([self.pad_token_id] * pad_l + temp_ids)

+

+ if i in image_sizes:

+ new_inx = []

+ for old_inx in image_sizes[i]:

+ new_inx.append([x + pad_l for x in old_inx])

+ image_sizes[i] = new_inx

+

+ return torch.LongTensor(padded_ids), torch.LongTensor(attention_mask), image_sizes

+

+ def process_mllm_input(self, mllm_inputs, target_img_size):

+ num_tokens_for_output_images = []

+ for img_size in target_img_size:

+ num_tokens_for_output_images.append(img_size[0] * img_size[1] // 16 // 16)

+

+ pixel_values, image_sizes = [], {}

+ b_inx = 0

+ for x in mllm_inputs:

+ if x["pixel_values"] is not None:

+ pixel_values.extend(x["pixel_values"])

+ for size in x["image_sizes"]:

+ if b_inx not in image_sizes:

+ image_sizes[b_inx] = [size]

+ else:

+ image_sizes[b_inx].append(size)

+ b_inx += 1

+ pixel_values = [x.unsqueeze(0) for x in pixel_values]

+

+ input_ids = [x["input_ids"] for x in mllm_inputs]

+ padded_input_ids, attention_mask, image_sizes = self.pad_input_ids(input_ids, image_sizes)

+ position_ids = self.create_position(attention_mask, num_tokens_for_output_images)

+ attention_mask, padding_images = self.create_mask(attention_mask, num_tokens_for_output_images)

+ attention_mask = self.adjust_attention_for_input_images(attention_mask, image_sizes)

+

+ return padded_input_ids, position_ids, attention_mask, padding_images, pixel_values, image_sizes

+

+ def __call__(self, features):

+ mllm_inputs = [f[0] for f in features]

+ cfg_mllm_inputs = [f[1] for f in features]

+ img_cfg_mllm_input = [f[2] for f in features]

+ target_img_size = [f[3] for f in features]

+

+ if img_cfg_mllm_input[0] is not None:

+ mllm_inputs = mllm_inputs + cfg_mllm_inputs + img_cfg_mllm_input

+ target_img_size = target_img_size + target_img_size + target_img_size

+ else:

+ mllm_inputs = mllm_inputs + cfg_mllm_inputs

+ target_img_size = target_img_size + target_img_size

+

+ (

+ all_padded_input_ids,

+ all_position_ids,

+ all_attention_mask,

+ all_padding_images,

+ all_pixel_values,

+ all_image_sizes,

+ ) = self.process_mllm_input(mllm_inputs, target_img_size)

+

+ data = {

+ "input_ids": all_padded_input_ids,

+ "attention_mask": all_attention_mask,

+ "position_ids": all_position_ids,

+ "input_pixel_values": all_pixel_values,

+ "input_image_sizes": all_image_sizes,

+ }

+ return data
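
Review note: the collator above left-pads the token ids, builds position ids that cover the text, one extra time-embedding token, and the output image tokens, and then builds a mask that is causal over text and bidirectional over the output image. The sketch below restates that logic in plain Python lists so the shapes are easy to inspect. The names `left_pad`, `position_ids_for`, and `block_mask` are hypothetical and not part of the PR. It assumes every sample in the batch has the same output image length, and it leaves out the per-sample image padding and the adjustment for input images.

```python
# Illustrative sketch only: a list-based restatement of the collator logic,
# not the PR's implementation. All function names here are hypothetical.


def left_pad(input_ids, pad_token_id=2):
    """Left-pad every sequence to the longest one and build a 0/1 mask."""
    max_len = max(len(ids) for ids in input_ids)
    padded, mask = [], []
    for ids in input_ids:
        pad = max_len - len(ids)
        padded.append([pad_token_id] * pad + list(ids))
        mask.append([0] * pad + [1] * len(ids))
    return padded, mask


def position_ids_for(mask_row, img_length):
    """Positions are 0 on padding, then count up over text + time token + image."""
    real = sum(mask_row)
    pad = len(mask_row) - real
    return [0] * pad + list(range(real + img_length + 1))


def block_mask(mask_row, img_length):
    """Rows are queries, columns are keys; 1 means 'may attend'."""
    real = sum(mask_row)
    pad = len(mask_row) - real
    seq_len = len(mask_row) + img_length + 1  # +1 for the time-embedding token
    rows = []
    # Padding query rows are filled with ones, as in the diff.
    for _ in range(pad):
        rows.append([1] * seq_len)
    # Text and time-token rows: causal, never attend to padding or image keys.
    for i in range(real + 1):
        rows.append([0] * pad + [1] * (i + 1) + [0] * (real - i) + [0] * img_length)
    # Output image rows: attend to every non-padding key, including each other.
    for _ in range(img_length):
        rows.append([0] * pad + [1] * (real + 1 + img_length))
    return rows


padded, mask = left_pad([[5, 6, 7], [8]])
positions = position_ids_for(mask[1], img_length=2)
attn = block_mask(mask[1], img_length=2)
```

For the second sample (one real token, two pad tokens, two image tokens), the resulting mask is 6 x 6: two all-ones padding rows, two causal rows, and two image rows that see everything except the padding columns.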

diff --git a/src/diffusers/utils/dummy_pt_objects.py b/src/diffusers/utils/dummy_pt_objects.py

index 6a1978944c9f..671ab63c9ef3 100644

--- a/src/diffusers/utils/dummy_pt_objects.py

+++ b/src/diffusers/utils/dummy_pt_objects.py

@@ -621,6 +621,21 @@ def from_pretrained(cls, *args, **kwargs):

requires_backends(cls, ["torch"])

+class OmniGenTransformer2DModel(metaclass=DummyObject):

+ _backends = ["torch"]

+

+ def __init__(self, *args, **kwargs):

+ requires_backends(self, ["torch"])

+

+ @classmethod

+ def from_config(cls, *args, **kwargs):

+ requires_backends(cls, ["torch"])

+

+ @classmethod

+ def from_pretrained(cls, *args, **kwargs):

+ requires_backends(cls, ["torch"])

+

+

class PixArtTransformer2DModel(metaclass=DummyObject):

_backends = ["torch"]

diff --git a/src/diffusers/utils/dummy_torch_and_transformers_objects.py b/src/diffusers/utils/dummy_torch_and_transformers_objects.py

index b899915c3046..29ebd554223c 100644

--- a/src/diffusers/utils/dummy_torch_and_transformers_objects.py

+++ b/src/diffusers/utils/dummy_torch_and_transformers_objects.py

@@ -1217,6 +1217,21 @@ def from_pretrained(cls, *args, **kwargs):

requires_backends(cls, ["torch", "transformers"])

+class OmniGenPipeline(metaclass=DummyObject):

+ _backends = ["torch", "transformers"]

+

+ def __init__(self, *args, **kwargs):

+ requires_backends(self, ["torch", "transformers"])

+

+ @classmethod

+ def from_config(cls, *args, **kwargs):

+ requires_backends(cls, ["torch", "transformers"])

+

+ @classmethod

+ def from_pretrained(cls, *args, **kwargs):

+ requires_backends(cls, ["torch", "transformers"])

+

+

class PaintByExamplePipeline(metaclass=DummyObject):

_backends = ["torch", "transformers"]

diff --git a/tests/models/transformers/test_models_transformer_omnigen.py b/tests/models/transformers/test_models_transformer_omnigen.py

new file mode 100644

index 000000000000..a7653f1f9d6d

--- /dev/null

+++ b/tests/models/transformers/test_models_transformer_omnigen.py

@@ -0,0 +1,88 @@

+# coding=utf-8

+# Copyright 2024 HuggingFace Inc.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+import unittest

+

+import torch

+

+from diffusers import OmniGenTransformer2DModel

+from diffusers.utils.testing_utils import enable_full_determinism, torch_device

+

+from ..test_modeling_common import ModelTesterMixin

+