
Very poor performance when using RedisCacheAdapter #5401


Comments


I believe Parse uses the cache only for roles and sessions. No actual query results are saved to or fetched from the cache. For this:

Are you perhaps not using the default MongoDB readPreference?


The data problem is indeed related to users, sessions and roles. After setting up RedisCacheAdapter, the data inconsistency is solved. The issue now seems to be ONLY related to performance when the distributed cache is on. Apparently the data problem arises because I rely on fields of `request.user` in a trigger like this:

```javascript
Parse.Cloud.beforeSave('TableName', function (request, response) {
  // <<< the account object is not present in the cached user
  const account = request.user && request.user.get('account');

  if (account && !request.object.has('account')) {
    request.object.set('account', account);
  }

  // In pre-3.0 Parse Server, a response-style beforeSave must call
  // response.success() or the save never completes
  response.success();
});
```


@saulogt Oh OK, I understand now. Can you clarify what you mean by distributed cache? Is it a Redis thing, or do you mean without a Redis adapter?


And one more thing, when using the default in-memory cache and running multiple nodes. Now, regarding your code snippet: see line 66 in f798a60.

Anyhow, it seems that when the user is updated, the cache is not cleared. I may be mistaken, but in practice it shouldn't need to be: some data inconsistency should be tolerated in a cache. Otherwise, it would be a replicated database.


Hey @georgesjamous. Thanks for your response. By "distributed cache" I actually meant a distributed, consistent cache using RedisCache :) Using RedisCacheAdapter actually caused the performance issue, but it solved the data consistency problem.


There is another case where I found a problem.

This case can also be solved with the Redis cache, despite the performance issue.

Yes, exactly: an in-memory cache is out of the question in a cluster environment.
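Below is a hypothetical simulation of why a per-process cache breaks down across nodes. Each `ServerNode` keeps its own `Map` as a cache over a shared database, which is also just a `Map` here. It is an illustration only, not Parse Server's `InMemoryCacheAdapter`. The class and method names are made up for the example.

```javascript
// Hypothetical model: two server processes ("dynos") share one database,
// but each keeps a private in-memory cache.
class ServerNode {
  constructor(db) {
    this.db = db; // shared "database" (a Map for illustration)
    this.cache = new Map(); // per-process cache, NOT shared between nodes
  }

  getUser(id) {
    // Read-through: fill the local cache from the database on a miss
    if (!this.cache.has(id)) this.cache.set(id, { ...this.db.get(id) });
    return this.cache.get(id);
  }

  updateUser(id, fields) {
    this.db.set(id, { ...this.db.get(id), ...fields });
    this.cache.delete(id); // only THIS node's cache is invalidated
  }
}

const db = new Map([['123456', { name: 'alice' }]]);
const nodeA = new ServerNode(db);
const nodeB = new ServerNode(db);

nodeB.getUser('123456'); // node B caches the user
nodeA.updateUser('123456', { account: 'acc1' }); // node A writes, invalidates locally

console.log(nodeA.getUser('123456').account); // acc1 (fresh from the db)
console.log(nodeB.getUser('123456').account); // undefined (stale cached copy)
```

A shared cache such as Redis removes this split, because every node reads and invalidates the same entries.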


OK, just thinking out loud here... If you are sure that the RedisCacheAdapter is the problem and not something else: I was curious how using the RedisCacheAdapter could cause performance issues if the Redis cluster and network can handle the load. I looked through the adapter's code and found that all requests to Redis are queued up and executed one by one. I don't know exactly what the purpose of this is; maybe it keeps the cache as consistent as possible by chaining operations. But if there is a throughput cap, it must be in the adapter itself.
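To make the queuing concrete, here is a minimal sketch of an adapter that pushes every call through a single shared promise chain. It is not the actual `RedisCacheAdapter` source. `FakeStore` is a hypothetical in-memory stand-in for a Redis client, and `SerializedCache`, `enqueue` and the 10 ms delay are illustrative assumptions.

```javascript
// Hypothetical stand-in for a Redis client: an in-memory store whose
// operations complete asynchronously after a fixed delay.
class FakeStore {
  constructor() {
    this.data = new Map();
  }

  op(fn, delayMs) {
    return new Promise((resolve) => {
      setTimeout(() => resolve(fn()), delayMs);
    });
  }
}

// Every get/put/del goes through ONE shared promise chain (`this.p`),
// so each call waits for all calls issued before it, whatever the key.
class SerializedCache {
  constructor(store, delayMs = 10) {
    this.store = store;
    this.delayMs = delayMs;
    this.p = Promise.resolve();
  }

  enqueue(fn) {
    // Passing a function to .then() defers the round trip until the
    // previous operation has settled.
    const result = this.p.then(() => this.store.op(fn, this.delayMs));
    // Swallow errors on the queue itself so one failure doesn't block later calls.
    this.p = result.catch(() => {});
    return result;
  }

  get(key) {
    return this.enqueue(() => this.store.data.get(key));
  }

  put(key, value) {
    return this.enqueue(() => {
      this.store.data.set(key, value);
    });
  }

  del(key) {
    return this.enqueue(() => {
      this.store.data.delete(key);
    });
  }
}

// Ten reads of unrelated keys still run back to back, so the batch takes
// roughly 10 x delayMs instead of about 1 x delayMs.
async function demoSerialized() {
  const cache = new SerializedCache(new FakeStore(), 10);
  const start = Date.now();
  await Promise.all(
    Array.from({ length: 10 }, (_, i) => cache.get(`User:${i}`))
  );
  return Date.now() - start;
}

demoSerialized().then((ms) => console.log(`10 serialized reads took ${ms} ms`));
```

With a single queue, throughput is bounded by one round trip at a time, however much load Redis itself could take. That matches the suspicion that the cap lives in the adapter.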

I suggest you try something out. Be aware that this may cause some inconsistent data: a Del request invoked before a Set but evaluated after it results in nothing being cached. This could be solved by creating a Promise chain per cache key, so that all requests for "User:123456" would be chained together, although this uses more memory. Worth a try. There is something like
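Independent of the snippet mentioned above, here is a minimal sketch of per-key chaining under the same assumptions. The Redis client is replaced by a hypothetical in-memory `Map` with a simulated round trip. `KeyedSerialCache` is an illustrative name and is not part of Parse Server.

```javascript
// Sketch: one promise chain per cache key instead of one global chain.
// Calls on the same key stay in issue order (a del issued before a put
// cannot be applied after it); calls on different keys run concurrently.
class KeyedSerialCache {
  constructor(delayMs = 10) {
    this.data = new Map(); // hypothetical in-memory stand-in for Redis
    this.delayMs = delayMs; // simulated network round trip
    this.chains = new Map(); // key -> tail promise of that key's queue
  }

  roundTrip(fn) {
    return new Promise((resolve) => {
      setTimeout(() => resolve(fn()), this.delayMs);
    });
  }

  enqueue(key, fn) {
    const tail = this.chains.get(key) || Promise.resolve();
    const result = tail.then(() => this.roundTrip(fn));
    const next = result.catch(() => {}); // keep the chain alive on errors
    this.chains.set(key, next);
    // Forget the chain once it drains, unless newer work was queued meanwhile,
    // so the map only holds keys with operations in flight.
    next.then(() => {
      if (this.chains.get(key) === next) this.chains.delete(key);
    });
    return result;
  }

  get(key) {
    return this.enqueue(key, () => this.data.get(key));
  }

  put(key, value) {
    return this.enqueue(key, () => {
      this.data.set(key, value);
    });
  }

  del(key) {
    return this.enqueue(key, () => {
      this.data.delete(key);
    });
  }
}

async function demoKeyed() {
  const cache = new KeyedSerialCache(10);
  // Same key: applied in the order issued, even without awaiting.
  cache.del('User:123456');
  cache.put('User:123456', { account: 'acc1' });

  // Different keys: these ten reads overlap instead of queuing.
  const start = Date.now();
  await Promise.all(
    Array.from({ length: 10 }, (_, i) => cache.get(`Role:${i}`))
  );
  const elapsed = Date.now() - start;

  const user = await cache.get('User:123456');
  return { elapsed, user };
}

demoKeyed().then(({ elapsed, user }) =>
  console.log(`10 keyed reads took ${elapsed} ms; user =`, user)
);
```

Removing a key's entry once its queue drains is one way to limit the extra memory mentioned above. A busy key simply keeps its chain until it goes idle.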


Interesting idea.


@saulogt, there is actually a problem in your code: the promise is executed as soon as it is created, so no per-key chaining happens. Check this sample I created, just to give you an idea... I haven't tested it.
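The pitfall is that a `Promise` executor runs synchronously as soon as `new Promise(...)` is called. The following is not the sample referred to above. It only illustrates the difference between chaining an already-created promise and chaining a function that creates it. The helper names `makeLog`, `eager` and `lazy` are hypothetical.

```javascript
// Each op logs when its executor starts and when its timer finishes.
function makeLog() {
  const log = [];
  const op = (name, delayMs) =>
    new Promise((resolve) => {
      log.push(`start ${name}`); // runs immediately on construction
      setTimeout(() => {
        log.push(`end ${name}`);
        resolve();
      }, delayMs);
    });
  return { log, op };
}

// Wrong: both promises are constructed, and therefore started, right away.
// .then() only delays when `second` is *observed*, not when it runs.
async function eager() {
  const { log, op } = makeLog();
  const first = op('A', 20);
  const second = op('B', 5);
  await first.then(() => second);
  return log;
}

// Right: pass a function to .then(), so B's executor runs only after A settles.
async function lazy() {
  const { log, op } = makeLog();
  await op('A', 20).then(() => op('B', 5));
  return log;
}

eager().then((log) => console.log('eager:', log.join(', ')));
lazy().then((log) => console.log('lazy: ', log.join(', ')));
```

In the eager version B finishes before A, even though it was "chained" after it. Only the lazy version actually serializes the two operations.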


@georgesjamous, you are totally right.


Is your problem solved, @saulogt?


Hey @georgesjamous! I hope to see a solution like this integrated into this repo. The timeouts still happen sometimes, but they don't crash the server. I haven't had time to debug them yet.


@saulogt I'm glad this worked for you. I will try to open a pull request modifying the default Redis cache adapter to reflect our modifications. The timeouts need to be debugged in another ticket, since we are not yet sure whether it's an adapter problem.


Can we close this now?


Closing via #5420.

Issue Description

I've been running my Parse Server app on 4 Heroku dynos for some time now. It handles about 10k requests per minute.

I recently noticed some issues where data was not found just after being saved to Parse. I immediately suspected caching, so I tested RedisCacheAdapter and the problem was solved. The documentation is not clear about the need for a distributed cache. Should I use RedisCacheAdapter even on a (multi-CPU) clustered app?

After some time, my app started to slow down to the point of getting timeouts. Adding more dynos didn't help.

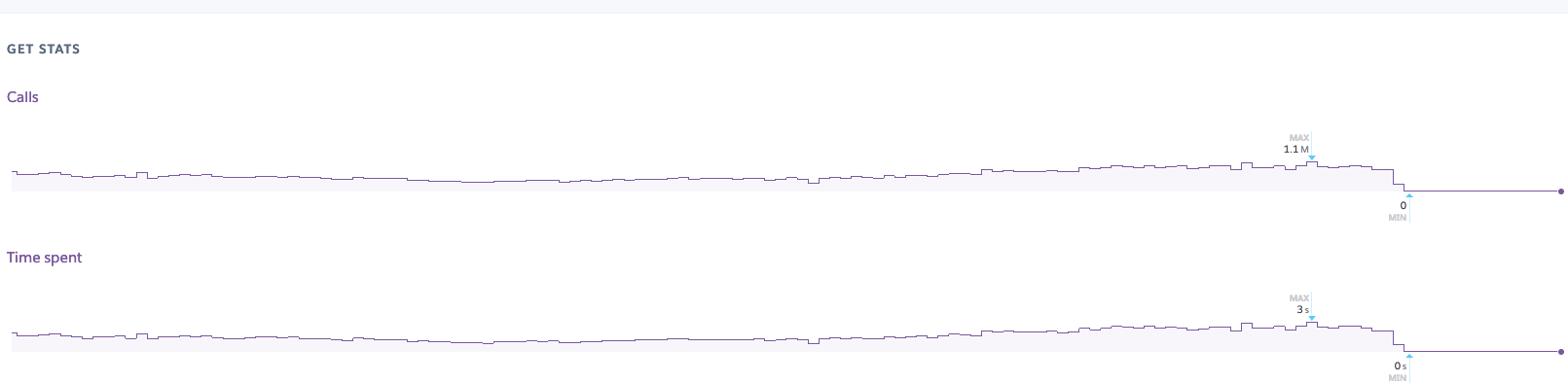

Heroku Redis (premium 0) metrics:

I'm under the impression that I've reached the scaling limit of Parse Server.

Steps to reproduce

Add a RedisCacheAdapter to a heavy multi-node app

Expected Results

Run smoothly

Actual Outcome

Timeouts

Environment Setup

Server

Database

Logs/Trace
