-

Notifications

You must be signed in to change notification settings - Fork 35

Pairwise distance calculation #241

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Comments

|

Hi @jeromekelleher, yes @jakirkham and I started a blog post last year but afraid I haven't had time to see it through. FWIW it's over here: dask/dask-blog#50 |

|

It would still be cool to finish that blog 😉 |

|

Just had a chat with @aktech, and he's going to have a look at this. |

|

Some of the potential options that I am looking at for dispatching: NEP 37

NEP 18

unumpy

|

|

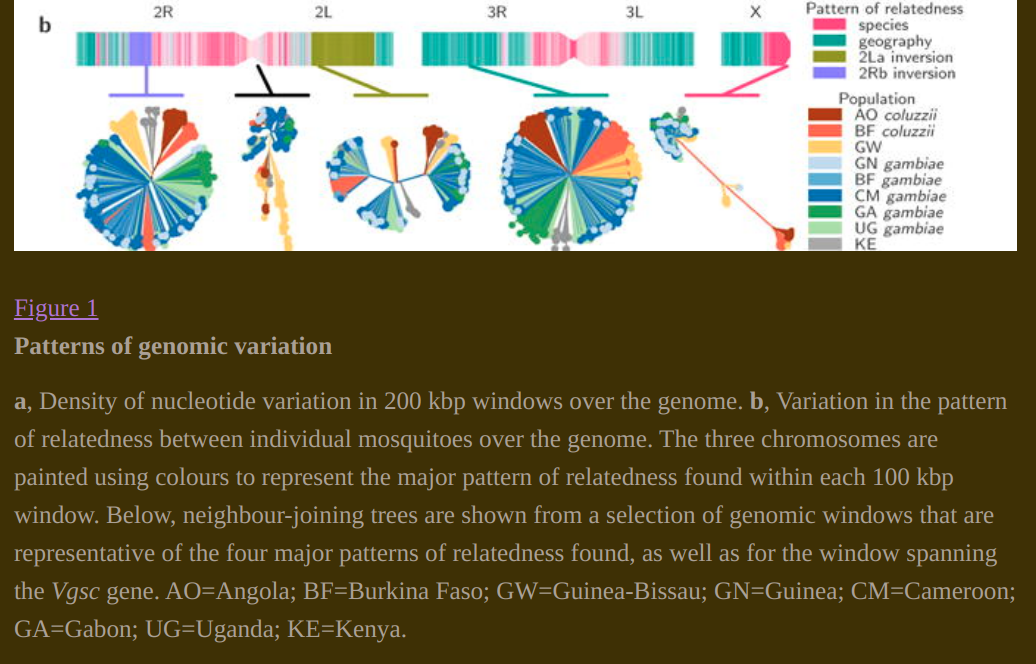

Just to add a bit more information about some motivating use cases. Prime motivation was to analyse population structure by computing pairwise genetic distance between individuals. From the distance matrix we would typically compute and visualise a neighbour joining tree. Here's what such a tree might look like: Taking this further, we also do an analysis where we compute pairwise distances in windows along the genome, and then compare those distance matrices to each other, to look for regions of the genome that convey similar (or different/unusual) patterns of relatedness. Here's a figure from our 2017 paper where we scanned the genome and painted it according to different patterns of relatedness: That analysis was done by computing a pairwise distance matrix for each 200 kbp window along the genome, then computing a pairwise correlation matrix between those distance matrices, then doing dimensionality reduction. |

|

The only way I can find to make this work with gufuncs (which again would cover the numpy/cupy backend cases well), is with broadcasting. This could actually be quite nice to build on if these benchmarks hold up on bigger datasets: from numba import guvectorize, njit

import dask.array as da

import numpy as np

# Generalized function that leaves pairwise definition up to caller

@guvectorize(['void(int8[:], int8[:], float64[:])'], '(n),(n)->()')

def corr1(x, y, out):

out[0] = np.corrcoef(x, y)[0, 1]

# A more typical pairwise compiled function

@njit

def corr2(x):

n = x.shape[0]

out = np.zeros((n, n), dtype=np.float64)

for i in range(n):

for j in range(n):

out[i, j] = np.corrcoef(x[i], x[j])[0, 1]

return out

# Make sure the results are the same for all functions

rs = da.random.RandomState(0)

x = rs.randint(0, 3, size=(500, 1000), dtype='i1')

c1 = corr1(x[:, None, :], x).compute() # Implicit broadcast creates pairs row vector pairs

c2 = corr2(x.compute())

c3 = np.corrcoef(x)

np.testing.assert_almost_equal(c1, c2)

np.testing.assert_almost_equal(c1, c3)

# Run some benchmarks

%%timeit

corr1(x[:, None, :], x).compute()

3.04 s ± 50.8 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

%%timeit

corr2(x.compute())

3.02 s ± 19 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)tl;dr numba.guvectorize can be used for pairwise calcs and is as fast as numba.njit for pairwise correlation on small datasets |

|

Thinking a little more about this, I believe there may be some utility in trying to find primitives like these to build on (the map/reduce idea I mentioned on the call yesterday): import numpy as np

import dask.array as da

from numba import guvectorize

rs = da.random.RandomState(0)

x = rs.randint(0, 3, size=(18, 40), dtype='i1').rechunk((6, 10))

# Pearson correlation "map" function for partial vector pairs

@guvectorize(['void(int8[:], int8[:], float64[:], float64[:])'], '(n),(n),(p)->(p)')

def corr_map(x, y, _, out):

out[:] = np.array([

np.sum(x),

np.sum(y),

np.sum(x * x),

np.sum(y * y),

np.sum(x * y),

len(x)

])

# Corresponding "reduce" function

@guvectorize(['void(float64[:,:], float64[:])'], '(p,m)->()')

def corr_reduce(v, out):

v = v.sum(axis=-1)

n = v[5]

num = n * v[4] - v[0] * v[1]

denom1 = np.sqrt(n * v[2] - v[0]**2)

denom2 = np.sqrt(n * v[3] - v[1]**2)

out[0] = num / (denom1 * denom2)

# A generic pairwise function for any separable distance metric

def pairwise(x, map_fn, reduce_fn, n_map_param):

# Pair rows of blocks together that correspond to the

# grid element in the resulting pairwise matrix before

# then computing partial calculations for each pair of

# row vectors within those paired blocks

x_map = da.concatenate([

da.concatenate([

da.stack([

map_fn(

x.blocks[i1, j][:, None, :],

x.blocks[i2, j],

np.empty(n_map_param))

for j in range(x.numblocks[1])

], axis=-1)

for i2 in range(x.numblocks[0])

], axis=1)

for i1 in range(x.numblocks[0])

], axis=0)

assert x_map.shape == (len(x), len(x), n_map_param, x.numblocks[1])

# Apply reduction to arrays with shape (n_map_param, n_column_chunk),

# which would easily fit in memory

x_reduce = reduce_fn(x_map.rechunk((None, None, -1, -1)))

return x_reduce

# There are 6 intermediate values in this case

x_corr = pairwise(x, corr_map, corr_reduce, 6)

np.testing.assert_almost_equal(x_corr.compute(), np.corrcoef(x).compute())

x_corr.compute().round(2)[:5, :5]

array([[ 1. , 0.09, -0.03, 0.14, 0.28],

[ 0.09, 1. , 0.04, 0.07, 0.03],

[-0.03, 0.04, 1. , 0.32, -0.25],

[ 0.14, 0.07, 0.32, 1. , 0.07],

[ 0.28, 0.03, -0.25, 0.07, 1. ]])The logic there is a bit gnarly and hard to put in words, but I it's not as complicated as it looks if there was an easy way to visualize it. This would support distance metrics on arrays with chunking in both dimensions and let us program conditional logic for sentinel values easily. That's particularly important given that even a simple metric like this doesn't have nan-aware support in dask (i.e. in da.corrcoef). And even if it did, it would only work if we promoted our 1-byte int types to larger floats. I view this as fairly important since at least being able to compute something like a genetic relatedness matrix on fully chunked arrays is something we ought to be able to do. I think this would also scale down well to cases where chunking is only in one dimension and at a glance, it looks like everything in https://github.com/scikit-allel/skallel-stats/blob/master/src/skallel_stats/distance/numpy_backend.py could be implemented this way. Computing only the upper or lower triangle for symmetric metrics would be tricky, but it's probably doable too. |

|

FWIW I did some work some time back on wrapping up distance computations with Dask in dask-distance, which may be of some use here. |

|

@eric-czech Thanks for the example, I thought I understood your map reduce idea and One question I have regarding this is consider an example of euclidean distance, For a given set of vectors:

What I don't clearly understand here is, what is the benefit of |

|

Hey @aktech, I don't see how the square root could be in the map step. What I would have imagined for euclidean distance is something like:

That's not great pseudocode but hopefully that makes some more sense. The map step would have to compress the two vectors down to some small number of scalars to be very useful. |

|

Looking at dask-distance some more (thanks for that bump @jakirkham!), it makes me wonder:

Do you have any advice for us on those @jakirkham? |

|

@eric-czech Thanks for the explanation. That makes sense. I think I |

Makes sense, I agree this would be unnecessary if the arrays aren't so big that you can fit them comfortably in memory along at least one dimension. |

|

Using |

|

Thanks again @jakirkham. On a related note, do you know an efficient way to go from a symmetric matrix to something like the dask-distance.squareform, but with column/row indexes in a dataframe? There are several use cases we have for that, and I'm wondering if there is a better way to do it than raveling the whole array, concatenating all the indexes to that 1D result (as in Dataset.to_dask_dataframe) and then filtering. E.g.: import xarray as xr

import numpy as np

dist = np.array([[1, .5], [.5, 1]])

ds = xr.Dataset(dict(dist=(('i', 'j'), dist)))

ds.to_dask_dataframe().query('i >= j').compute()

i j dist

0 0 0 1.0

2 1 0 0.5

3 1 1 1.0It would be great if there was a way to avoid that filter. |

|

Can this be closed now that #306 has been merged? |

I think so. |

A fundamental operation that we want to support is efficiently calculating pairwise distances over a number of different metrics across a number of different computational backends. See the prototype as an example of what we're trying to achieve.

@alimanfoo - I think you had a blog post about this also?

The text was updated successfully, but these errors were encountered: