
Search reindex task leaves empty index. #3746


Comments

Me, too.

+1


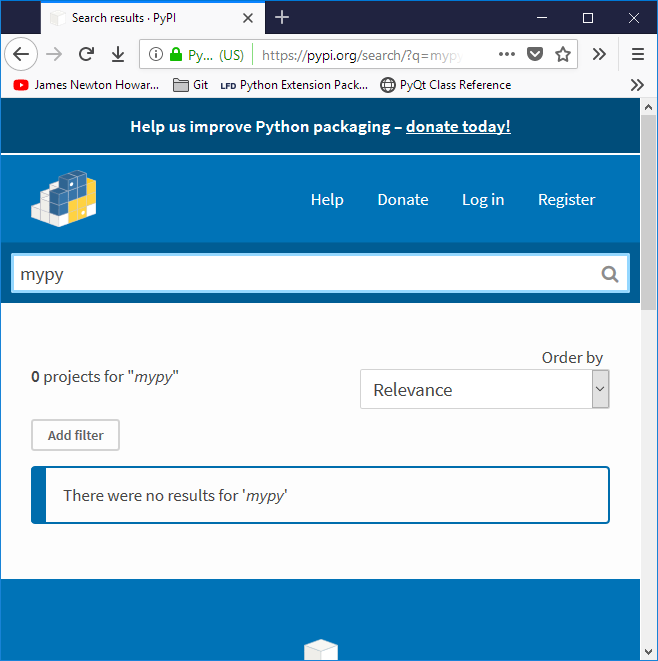

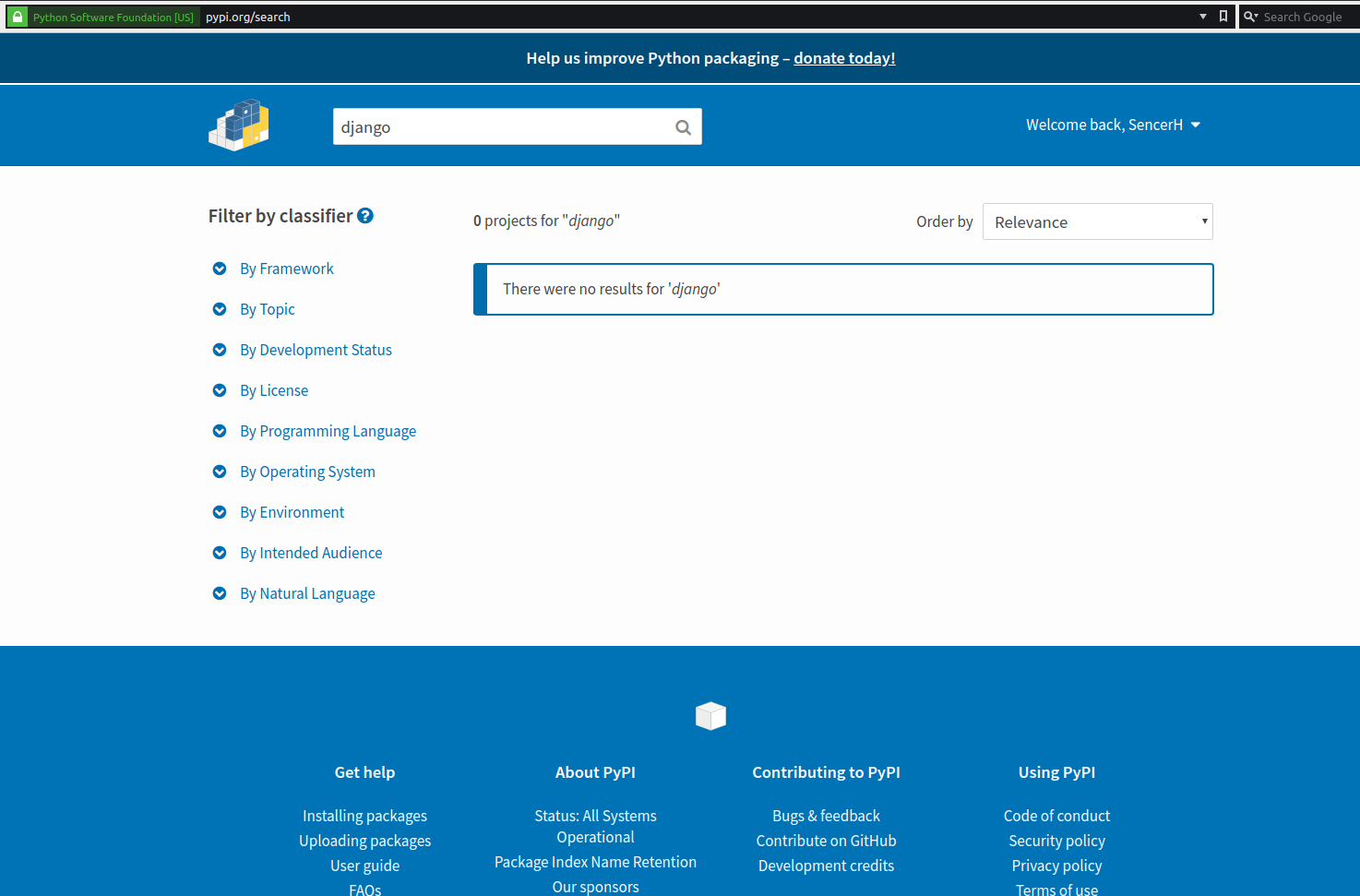

Neither pip 10.0.0 nor pip 9.0.1 is showing any results at the moment:

Neither the website search nor pip search is returning any results, but pip install and pip list -o (which has to query the versions) both seem to be working, so it looks like only the search interface is affected.

Same here.

I'm sure people are already working on a fix.

It appears to be solved in both the web UI and the CLI (which probably use the same endpoint) 👍

We seem to have some kind of issue in the task that runs every 3 hours to update the index. It was aggravated by the changes reverted in #3716, but the underlying issue still seems to be in play. Something is clearly going wrong in the "swap" in this code: https://github.com/pypa/warehouse/blob/b463af8aac4c778fe5fd1d7abe6e52c00bd06a13/warehouse/search/tasks.py#L131-L167
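The linked code isn't reproduced in this thread. As a rough illustration of the create/populate/swap sequence being discussed, here is a minimal sketch built on a hypothetical in-memory `FakeCluster` stand-in, not the real Elasticsearch client. `FakeCluster`, `reindex`, and the `projects-N` index names are all assumptions for illustration and not Warehouse's actual code. The property it shows is that bulk writes name the new index explicitly, and the alias only moves after those writes.

```python
from dataclasses import dataclass, field


@dataclass
class FakeCluster:
    """Hypothetical in-memory stand-in for an Elasticsearch cluster."""

    indices: dict = field(default_factory=dict)  # index name -> list of docs
    aliases: dict = field(default_factory=dict)  # alias name -> index name

    def create_index(self, name):
        self.indices[name] = []

    def bulk(self, index, docs):
        # Writes land in whichever index the caller names explicitly.
        self.indices[index].extend(docs)

    def swap_alias(self, alias, new_index):
        # Point the alias at the new index and return its previous target.
        old_index = self.aliases.get(alias)
        self.aliases[alias] = new_index
        return old_index

    def delete_index(self, name):
        del self.indices[name]


def reindex(cluster, alias, new_index, docs):
    """Build new_index, then move the alias to it and drop the old index."""
    cluster.create_index(new_index)
    # Target the new index by name, not via the alias: the alias still
    # points at the old index until the swap below.
    cluster.bulk(new_index, docs)
    old_index = cluster.swap_alias(alias, new_index)
    if old_index is not None and old_index != new_index:
        cluster.delete_index(old_index)
    return old_index


cluster = FakeCluster()
reindex(cluster, "projects", "projects-1", [{"name": "numpy"}])
reindex(cluster, "projects", "projects-2", [{"name": "numpy"}, {"name": "requests"}])
print(cluster.aliases)  # {'projects': 'projects-2'}
```

If the bulk step were to write through the alias instead, every document would go to the index that is about to be deleted, leaving the freshly swapped-in index empty, which matches the symptom in this issue.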

This seems to be related to running the indexing job as a Celery task. I'm unable to reproduce it when running the reindex job from the CLI, even when kicking off two of them "in competition".

Our Elasticsearch cluster has been upgraded from a very early release (5.0) to the latest available release in the 5.x series (5.6.9). The upgrade was a hopeful fix as much as general good practice: perhaps we were hitting a bug that has since been resolved. We also disabled automatic index creation, which may have caused the issues that led to #3716. One other observation: in our handling of the index swap, we do not wait for a "green" status on the new index before swapping the alias and deleting the old index. Perhaps we should?
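One way to act on the "wait for green" idea is a small polling helper that runs before the alias swap. The sketch below is hypothetical: `wait_for_health` and its parameters are illustrative names, and `get_status` is any callable returning `"red"`, `"yellow"`, or `"green"`. With elasticsearch-py, the cluster health API's `wait_for_status` parameter can do the waiting server-side instead; this version only shows the logic with an injectable clock so it can be exercised without a cluster.

```python
import time

# Health levels ordered so that "at least yellow" comparisons work.
_RANK = {"red": 0, "yellow": 1, "green": 2}


def wait_for_health(get_status, target="green", timeout=30.0, interval=1.0,
                    clock=time.monotonic, sleep=time.sleep):
    """Poll get_status() until it reaches `target`, else raise TimeoutError."""
    deadline = clock() + timeout
    while True:
        status = get_status()
        if _RANK[status] >= _RANK[target]:
            return status
        if clock() >= deadline:
            # Do not swap the alias or delete anything if we give up here.
            raise TimeoutError(f"index health is {status!r}, wanted {target!r}")
        sleep(interval)


statuses = iter(["red", "yellow", "green"])
print(wait_for_health(lambda: next(statuses), sleep=lambda s: None))  # green
```

One caveat: on a single-node cluster with replicas configured, replica shards cannot be allocated on the same node as their primaries, so health stays yellow and a green target would always time out.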

This occurred again in prod on the last index task. A new index is being created now; I grabbed the logs to investigate.

State found: the index job attempted to create the new index, but the result was empty. It nevertheless continued on to delete the previous index and take over the alias.
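The failure above is that an empty index still went live. A defensive check before the swap would leave the old index and alias in place. This is a minimal sketch over plain dicts. `swap_if_populated`, `IncompleteIndexError`, the `expected` count, and the 0.9 `min_ratio` threshold are all hypothetical choices, not Warehouse code. Against a real cluster the count would come from something like the `_count` API, run after a refresh so that freshly bulk-indexed documents are visible.

```python
class IncompleteIndexError(RuntimeError):
    """Raised when a freshly built index looks too small to go live."""


def swap_if_populated(aliases, indices, alias, new_index, expected, min_ratio=0.9):
    """Move `alias` to `new_index` only if it holds enough documents.

    aliases:  dict of alias name -> index name (hypothetical in-memory model)
    indices:  dict of index name -> list of documents
    expected: number of documents the job meant to index
    """
    count = len(indices.get(new_index, []))
    if count == 0 or count < expected * min_ratio:
        # Keep the old index and alias untouched; discard the bad new index.
        indices.pop(new_index, None)
        raise IncompleteIndexError(
            f"{new_index} has {count} docs, expected about {expected}"
        )
    old_index = aliases.get(alias)
    aliases[alias] = new_index
    if old_index is not None and old_index != new_index:
        indices.pop(old_index, None)
    return old_index
```

Raising instead of silently skipping the swap means the task fails visibly, while search keeps serving the previous index.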

Logs (I excluded the `_bulk` calls for clarity, but there were plenty of them), compared with the two previous runs:

It seems #3774 may have helped, which leads me to believe some state was being cached by the Celery worker.
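The thread only suspects cached state, so the following is a hypothetical illustration rather than a diagnosis. Module-level state lives as long as the worker process, so in a long-lived Celery worker it survives from one task run to the next. In the sketch, the buggy variant caches its write target on the first run, and a later run in the same process writes into a stale index. All names here are invented for illustration. Computing the target inside each task avoids this. So does recycling worker processes (Celery's `worker_max_tasks_per_child` setting is one way to do that), though the thread doesn't say which approach #3774 used.

```python
import itertools

_run_counter = itertools.count(1)
# Module-level: persists across task invocations within one worker process.
_cached_write_target = None


def new_index_name(base):
    """Hypothetical naming scheme: a fresh index name per reindex run."""
    return f"{base}-{next(_run_counter)}"


def reindex_task_cached(base):
    """Buggy variant: reuses the write target cached on the first run."""
    global _cached_write_target
    new_index = new_index_name(base)
    if _cached_write_target is None:
        _cached_write_target = new_index
    # Returns (index that will receive the alias, index actually written to).
    return new_index, _cached_write_target


def reindex_task_explicit(base):
    """Fixed variant: derives the write target inside each run."""
    new_index = new_index_name(base)
    return new_index, new_index
```

On the second call in the same process, `reindex_task_cached` returns `("projects-2", "projects-1")`: the alias would move to an index that received no documents.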

We've been going steady for 3 days. Closing.


The problem is that |

* Make sure data always goes to new index (Fixes #3746)
* Make celery workers more short lived
* Fix tests

The 'Search projects' function does not work for me on https://pypi.org. Irrespective of the query, the search does not return any results. (Example: https://pypi.org/search/?q=numpy)
